School Item Analysis (Non Writing)

The School Item Analysis report compares a school's performance on each NAPLAN item with that of other State schools. Schools can analyse their NAPLAN performance item by item and compare it with State performance on the same item.

The report covers the domains of Reading, Spelling, Grammar & Punctuation, and Numeracy. Writing results are presented in a separate report.

This report allows teachers and leaders to identify strengths and areas for development as well as to analyse the performance of classes and individual students for each item of the assessment.

How to use this report

Use the slicers provided to filter and further analyse the data.

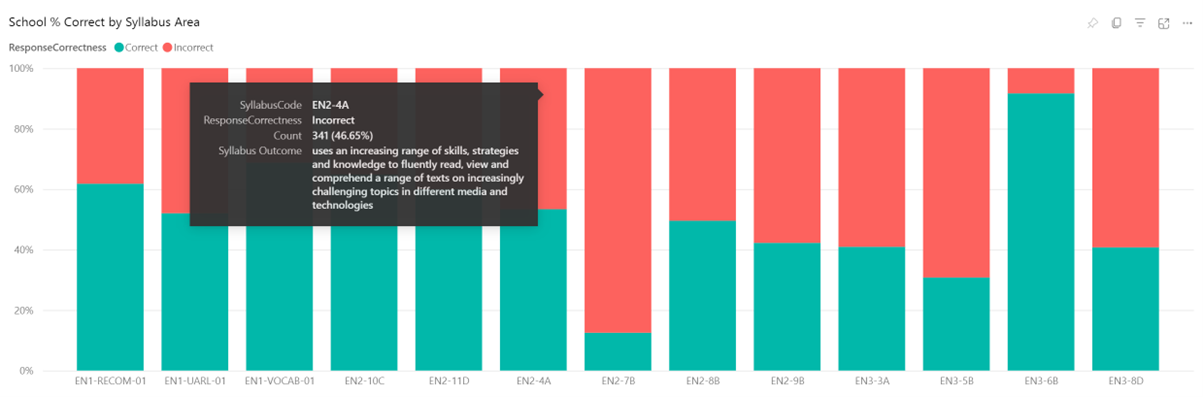

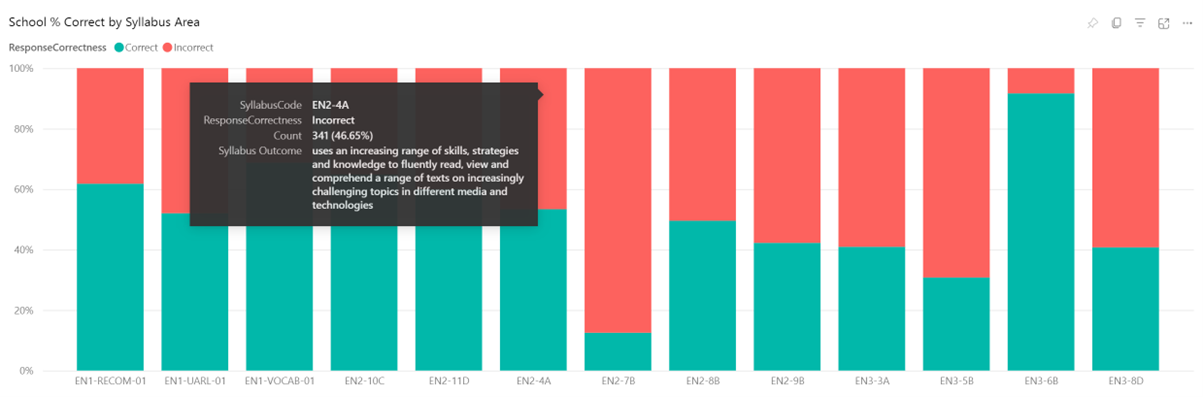

School % Correct by Syllabus Area

This chart displays the percentage of correct and incorrect responses for each syllabus area.

Hovering over the chart displays the Syllabus Code, Response Correctness, Count and the Syllabus Outcome.

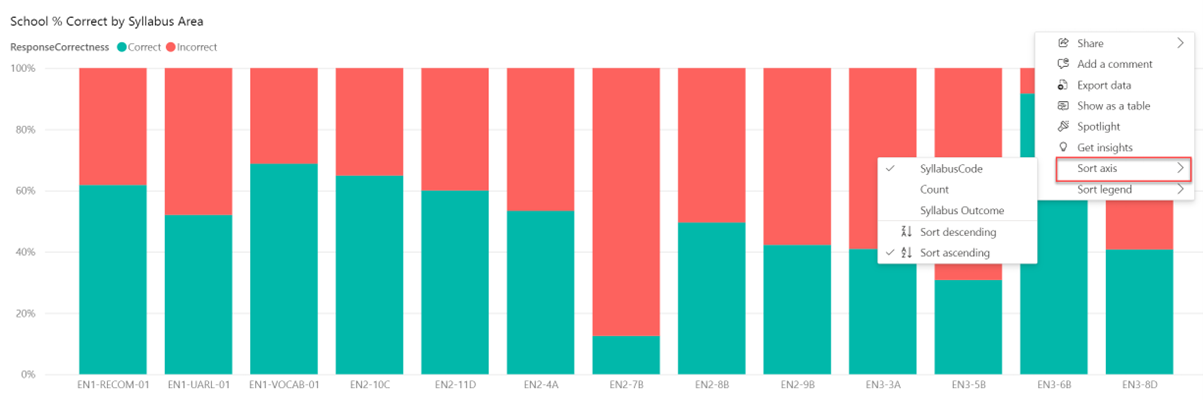

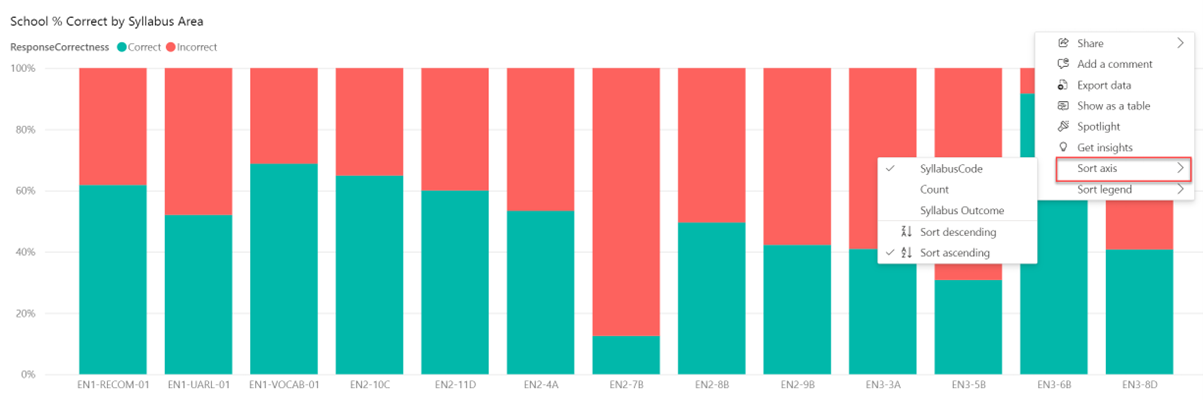

The chart is sorted by syllabus code by default. To change the sort order, select the ellipsis (...) and then select 'Sort axis'. Users can sort by count or syllabus outcome, in ascending or descending order.
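For schools that also work with exported response data, the calculation behind this chart can be sketched as follows. This is a minimal illustration only: the record layout, the `percent_correct_by_area` helper and the `AREA-A` style codes are hypothetical and are not taken from the report itself.

```python
from collections import defaultdict

# Hypothetical response records: (syllabus_code, is_correct).
# Codes and values are made up for illustration only.
responses = [
    ("AREA-A", True), ("AREA-A", True), ("AREA-A", False), ("AREA-A", True),
    ("AREA-B", False), ("AREA-B", True),
    ("AREA-C", True),
]

def percent_correct_by_area(records):
    """Return {syllabus_code: (count, percent_correct)}, ordered by syllabus code."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for code, is_correct in records:
        totals[code] += 1
        if is_correct:
            correct[code] += 1
    return {
        code: (totals[code], round(100 * correct[code] / totals[code], 1))
        for code in sorted(totals)
    }

summary = percent_correct_by_area(responses)

# Re-sort by count, descending (similar in spirit to 'Sort axis' > count).
by_count = sorted(summary.items(), key=lambda kv: kv[1][0], reverse=True)

for code, (count, pct) in by_count:
    print(f"{code}: {count} responses, {pct}% correct")
```

The default ordering by syllabus code mirrors the chart's initial sort, and the second sort shows how the same data reads when ordered by count.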

Tips for analysis:

- Which outcome(s) had the highest count? What proportion of the outcome(s) was answered correctly and incorrectly?

- Use the cross-highlighting feature to better understand the item descriptors. To cross-highlight, select the syllabus outcome and then focus on the reduced list of item descriptors. Look for patterns across the item descriptors. Does anything stand out? Does anything need further investigation?

Item Analysis – School compared to State

This chart compares the percentage of correct responses between the school and State for each item.

Hover over the chart to see the question code, school correct %, item difficulty, and the total participation for the school and the State.

Tips for analysis:

- Use the ellipsis to sort the data by student participation. Select 'Sort axis', then select total participation and sort in descending order. The items that the most students were exposed to now appear on the left-hand side.

- Consider items with a higher percentage correct and a lower percentage correct. Used alongside other data sources, this information may suggest areas of strength and areas for development for cohorts.

- Use the cross-highlighting feature by selecting the item. This shows which students answered the item correctly and which answered it incorrectly.
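
The sorting and comparison steps in these tips can also be reproduced outside the report. The sketch below assumes a hypothetical list of item records; the field names and figures are illustrative and do not reflect the report's actual data model.

```python
# Hypothetical item records; field names and values are illustrative only.
items = [
    {"question_code": "Q1", "school_correct_pct": 82.0, "total_participation": 45},
    {"question_code": "Q2", "school_correct_pct": 40.0, "total_participation": 60},
    {"question_code": "Q3", "school_correct_pct": 65.0, "total_participation": 12},
]

# Equivalent of 'Sort axis' > total participation > descending.
by_participation = sorted(
    items, key=lambda item: item["total_participation"], reverse=True
)

# Items with the highest and lowest percentage correct.
highest = max(items, key=lambda item: item["school_correct_pct"])
lowest = min(items, key=lambda item: item["school_correct_pct"])

print([item["question_code"] for item in by_participation])
print("Highest % correct:", highest["question_code"])
print("Lowest % correct:", lowest["question_code"])
```

As in the report, the highest and lowest items are starting points for discussion and are best read alongside other data sources.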

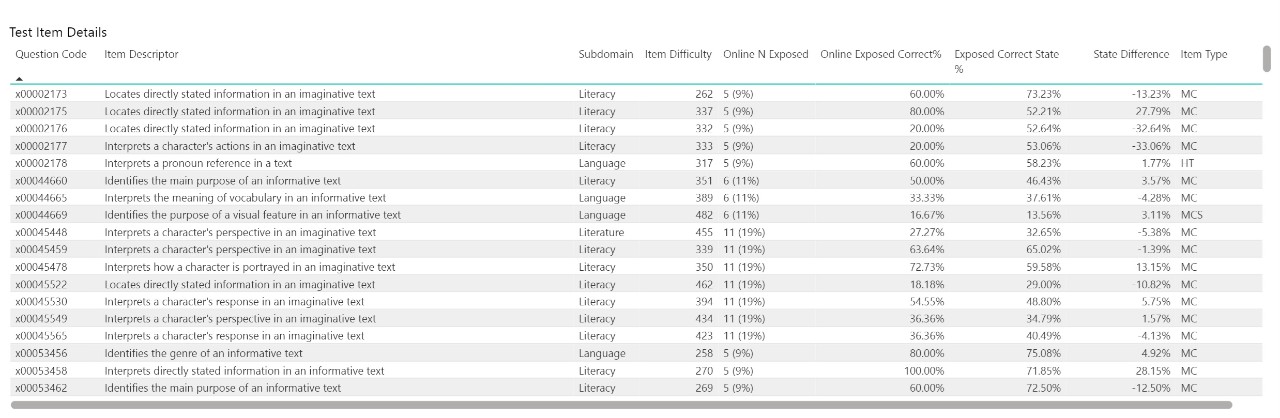

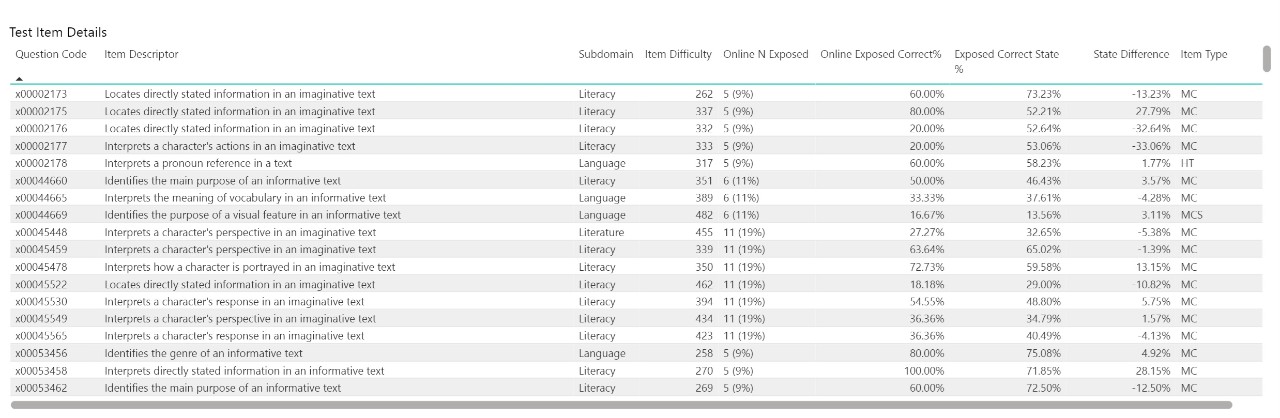

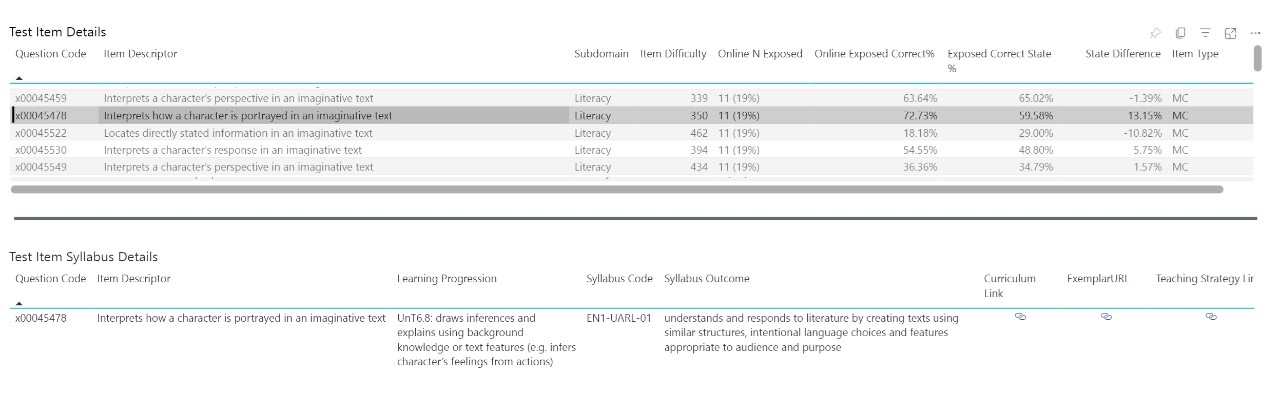

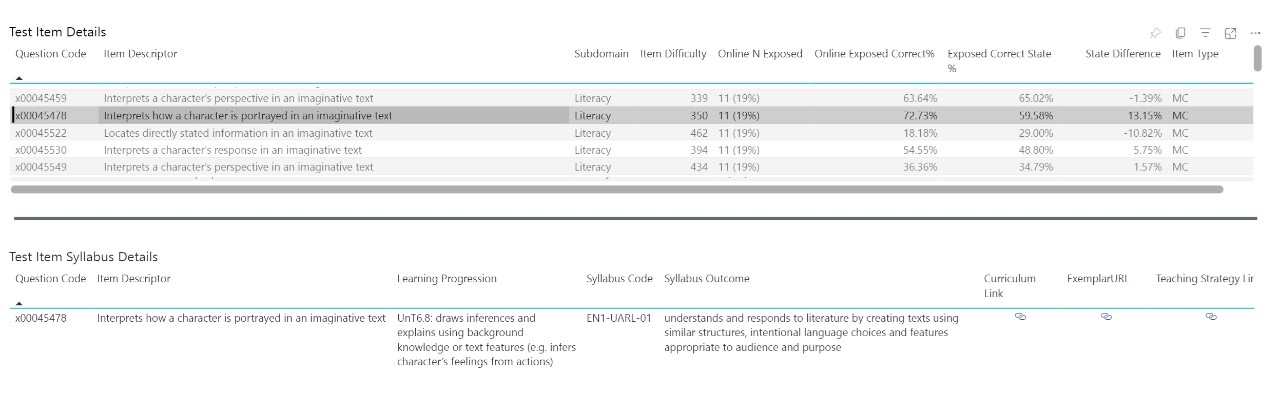

Test Item Details

This table displays each item/question in the selected assessment and domain.

The table can be sorted by question code, item descriptor, subdomain, item difficulty, online number exposed, online exposed correct %, exposed correct State %, State difference and item type.

The Item Descriptor shown is not the actual item/question text but is the outcome expected of that item.

Tips for analysis:

- Hover over any column header until you see a triangle. This means you can sort data into ascending or descending order. Select the header to sort.

- Sort by Item Descriptor. This groups items by the first word of the descriptor, which helps users see clusters of similar items.

- Sort by Online N Exposed, but be cautious about drawing conclusions for items where only a small number of students were exposed.

- Sort by State Difference. A positive difference may suggest an area of strength. A negative difference may suggest an area for development.

- Sort by Item Type to better understand the types of questions students are exposed to.
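
The State Difference and small-cohort tips above can be combined in a short sketch. It assumes that State difference is the school's percentage correct minus the State's percentage correct, which is consistent with a positive value suggesting a strength but is not confirmed by the report. The `MIN_EXPOSED` threshold and all field names are hypothetical.

```python
# Hypothetical threshold below which results are treated with caution.
MIN_EXPOSED = 10

# Hypothetical item records; values are illustrative only.
items = [
    {"question_code": "Q1", "n_exposed": 48, "school_pct": 75.0, "state_pct": 68.0},
    {"question_code": "Q2", "n_exposed": 4, "school_pct": 25.0, "state_pct": 60.0},
    {"question_code": "Q3", "n_exposed": 30, "school_pct": 50.0, "state_pct": 62.5},
]

def with_state_difference(records, min_exposed=MIN_EXPOSED):
    """Add an assumed State difference and a low-exposure flag, sorted by difference."""
    rows = []
    for record in records:
        rows.append({
            **record,
            # Assumption: difference = school % correct - State % correct.
            "state_difference": round(record["school_pct"] - record["state_pct"], 1),
            "low_exposure": record["n_exposed"] < min_exposed,
        })
    return sorted(rows, key=lambda row: row["state_difference"], reverse=True)

ranked = with_state_difference(items)

for row in ranked:
    note = " (few students exposed, interpret with caution)" if row["low_exposure"] else ""
    print(f'{row["question_code"]}: {row["state_difference"]:+.1f}{note}')
```

Flagging low-exposure items rather than removing them keeps every item visible while marking the ones where conclusions are least reliable.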

Select an Item Descriptor to show additional information, including the learning progression, the syllabus outcome, and links to the Australian Curriculum and exemplar.

To make items easier to organise, they have been grouped into skillsets by item descriptor.

Note: Because items with the same item descriptor may vary in difficulty, a skillset may also cover a range of content complexity.

Tips for analysis:

- Identify areas of strength and areas for development for students within a class. This may assist teachers to modify, adapt or incorporate additional teaching practices to support the development of specific skills for different groups of students.

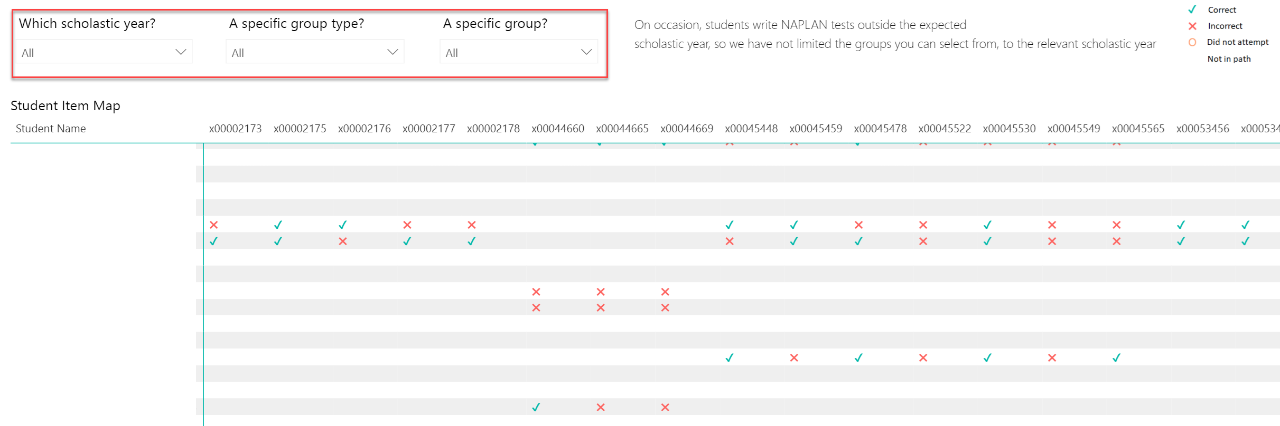

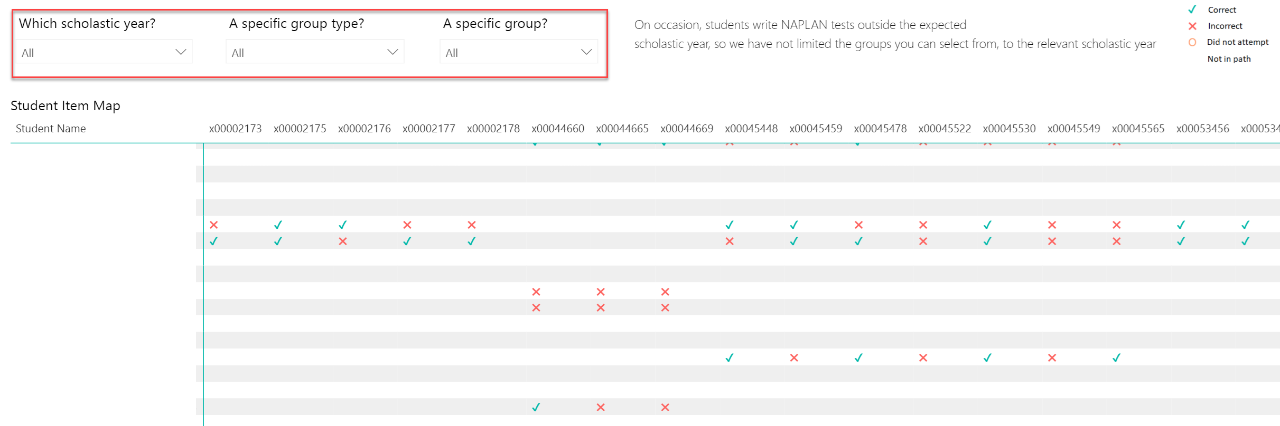

Student Item Map

This table displays responses to questions for individual students. A correct response is shown as a green tick, an incorrect response as a red cross and a non-attempt as a red circle.

Use the Scholastic year slicer to choose a scholastic year. The specific group slicers are not available until after November 2023.

Tips for analysis:

- Look for patterns and trends across items for students in a specific group. Are there items that a considerable number of students were exposed to? Are there items that students consistently answered correctly or incorrectly?

- Identify students or questions where there were non-attempts.
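
The patterns described in these tips can be summarised with a small sketch. It assumes a hypothetical mapping from each student to their outcome per question, where a question missing from a student's record means the student was not exposed to it. The outcome labels and student names are illustrative only.

```python
from collections import Counter

# Hypothetical map: student -> {question_code: outcome}.
# A question missing from a student's record means they were not exposed to it.
student_item_map = {
    "Student A": {"Q1": "correct", "Q2": "incorrect", "Q3": "non_attempt"},
    "Student B": {"Q1": "correct", "Q2": "non_attempt"},
    "Student C": {"Q1": "incorrect", "Q2": "incorrect", "Q3": "correct"},
}

def outcome_counts_by_item(item_map):
    """Count correct, incorrect and non-attempt outcomes for each question."""
    counts = {}
    for answers in item_map.values():
        for code, outcome in answers.items():
            counts.setdefault(code, Counter())[outcome] += 1
    return counts

def students_with_non_attempts(item_map):
    """Return the students who have at least one non-attempt, in name order."""
    return sorted(
        student for student, answers in item_map.items()
        if "non_attempt" in answers.values()
    )

by_item = outcome_counts_by_item(student_item_map)
flagged = students_with_non_attempts(student_item_map)

for code in sorted(by_item):
    exposed = sum(by_item[code].values())
    print(code, "exposed:", exposed, dict(by_item[code]))
print("Students with non-attempts:", flagged)
```

The per-item totals show how many students were exposed to each question, and the flagged list picks out students with non-attempts for follow-up.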