Understanding the needs of your class and individual students

For: Teachers

Scout provides several reports that can inform decisions about improving student literacy and numeracy. These reports help teachers plan teaching and learning activities that meet the needs of individual students in their class.

This Scout in Practice focuses on how to use the Class report and the Student summary report (including Student item analysis) to identify areas of strength and areas for development for classes and for individual students.

To support the identification of focus areas across literacy and numeracy, we recommend using a range of Scout data, including School item analysis, alongside internal data available to schools.

Class report

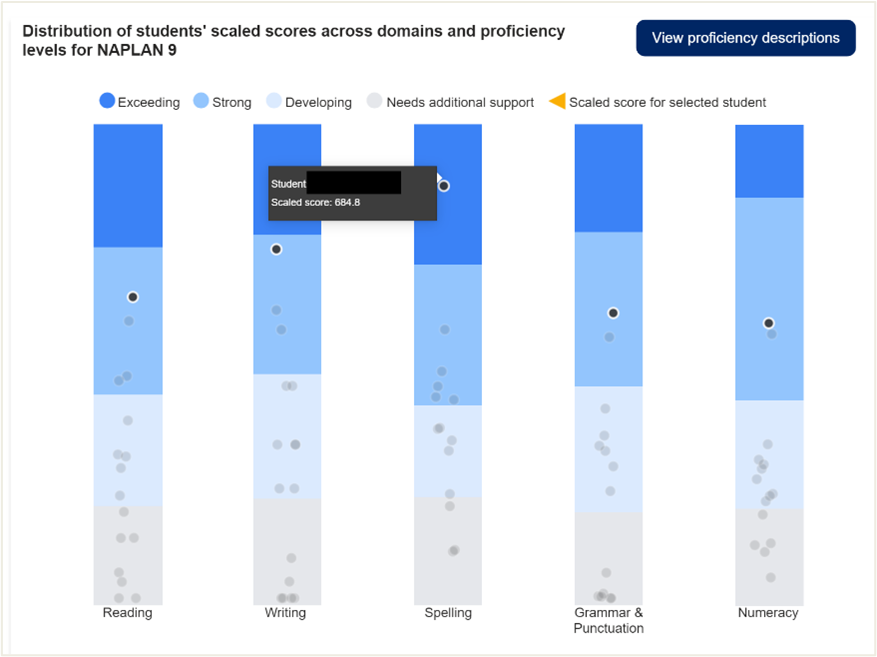

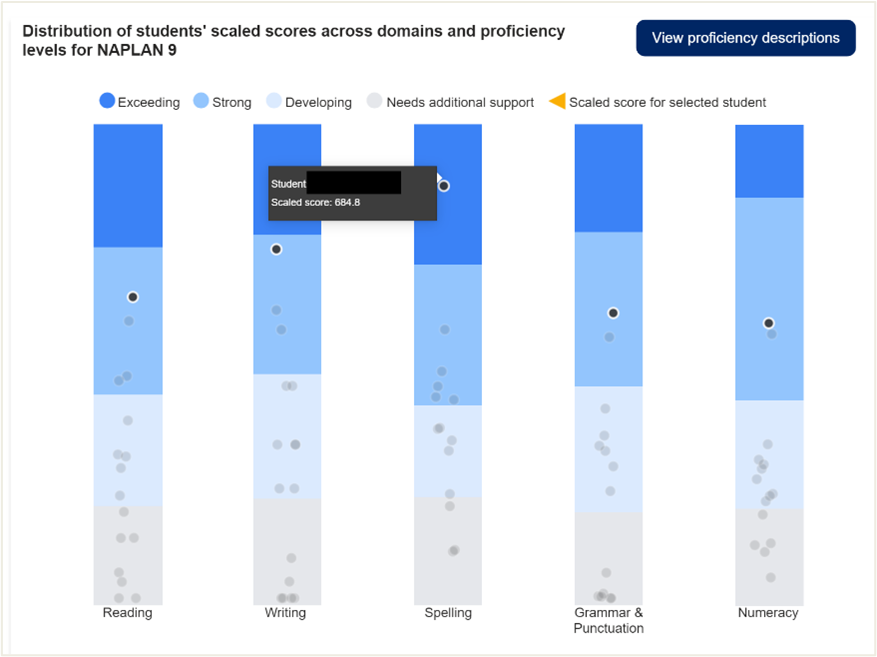

The Class report shows the distribution of students’ scaled scores across domains and proficiency levels for NAPLAN Years 3, 5, 7 and 9. A student list shows, for each student, the number of domains at the selected proficiency level. Users can focus on data for individual timetabled classes and on the achievement of different equity groups.

Scenario

A classroom teacher would like to better understand which proficiency levels students are working at across the NAPLAN literacy domains. The teacher would also like to investigate which specific areas within reading might need addressing, both for the whole class and for individual students, including students who may require extension and those who require additional support to meet expectations.

Key question: What proficiency levels are students in my class achieving?

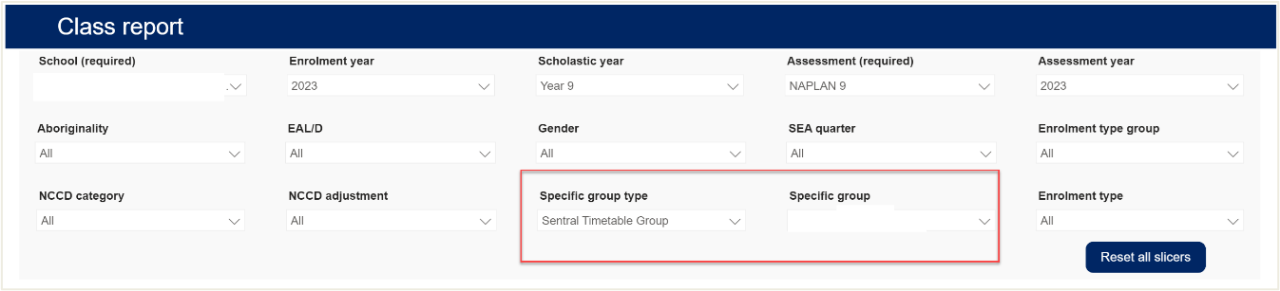

Open the Class report. Select the following slicers:

- School

- Enrolment year

- Scholastic year

- Assessment

- Assessment year

- Specific group type (this allows users to select a range of groups including Sentral timetabled groups)

- Specific group (this allows users to select individual classes or groups)

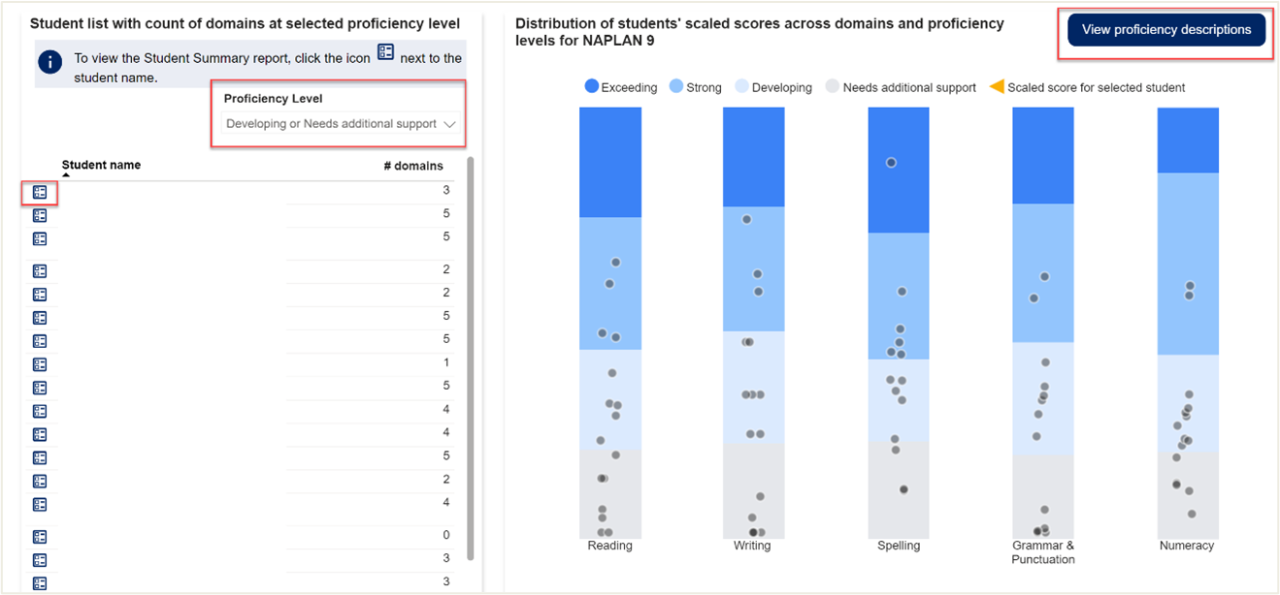

On the left, users will see a list of student names. Change the Proficiency Level slicer as needed. In this example, the Proficiency Level slicer is set to Developing / Needs additional support. Students are listed alphabetically by default, with the number of domains at that level shown for each student. Users can also select the icon next to a student’s name to view the Student summary report. Selecting an individual student highlights that student across the five domain bars.

On the right, users can view the distribution of students in the selected class. Hovering over a dot shows the student’s name and scaled score. Clicking a dot cross-highlights that student across all reports.

Consider:

- Which students are exceeding expectations and might need extension?

- Which students are at the Developing and Needs additional support proficiency levels? What additional support might they need?

- Are results consistent across all domains?

- Which students are close to the boundary between two proficiency levels? What could be implemented to ensure they continue to make progress?

- Are any results a surprise? Which results require further investigation?

Student summary report

The Student summary report allows users to view the achievement of one student across all domains. Three separate reports are available:

- Student summary

- Student item analysis

- Student item analysis (writing)

Key question: I have identified which students are at the ‘strong’ proficiency level. How can I understand which areas individual students need to develop further?

Open the Student summary report. Go to the Student item analysis report. Select the following slicers:

- School

- Assessment

- Enrolment year

- Scholastic year

- Specific group type

- Domain

Use the Student slicer to select the student.

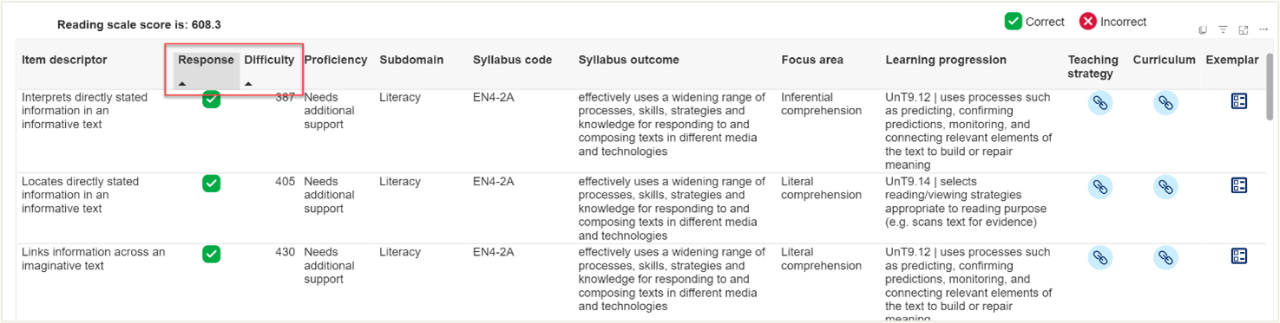

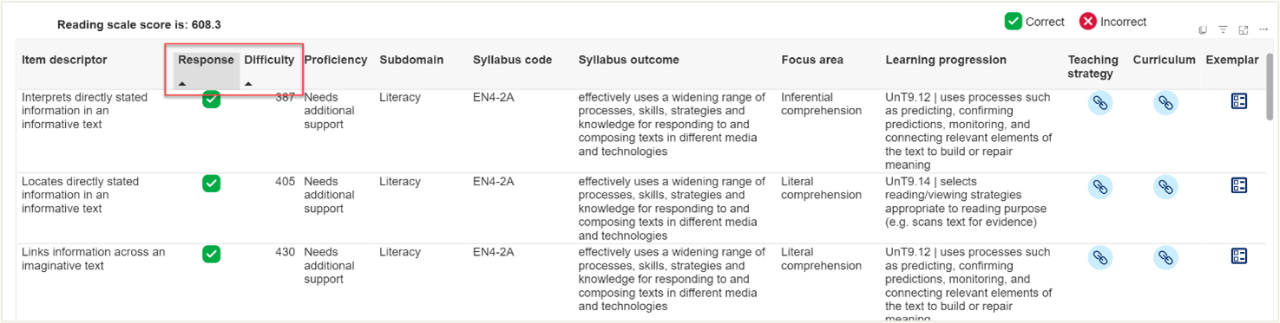

To sort data, hover over any column header that displays a triangle and click it.

There are multiple ways to analyse this data. Common approaches include looking at:

- Similar items. Hover over the Item descriptor column and click to sort items by their first word.

- Correct or incorrect responses. Hover over the Response column and click to group correct and incorrect responses.

- Item difficulty. Hover over the Difficulty column and click once to sort from lowest to highest.

- Syllabus code. Hover over the Syllabus code column and click to sort in numerical order.

- Focus area. Hover over the Focus area column and click once to sort by the first word.

The following steps show one approach to analysing the data. Users are encouraged to use a variety of approaches to understand the strengths and areas for development of individual students.

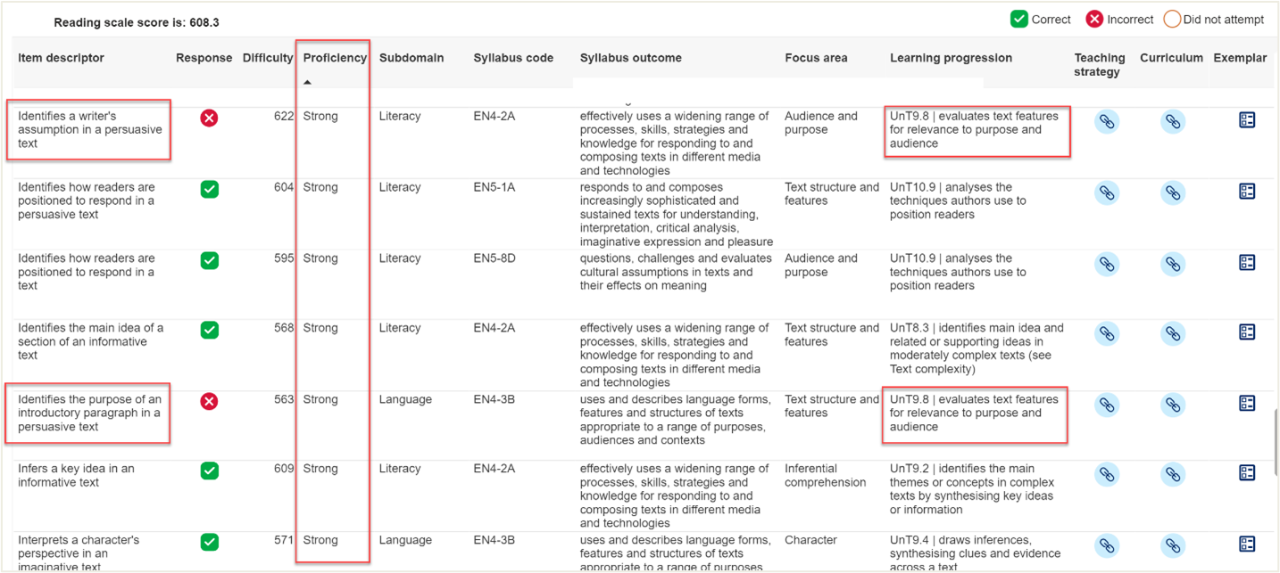

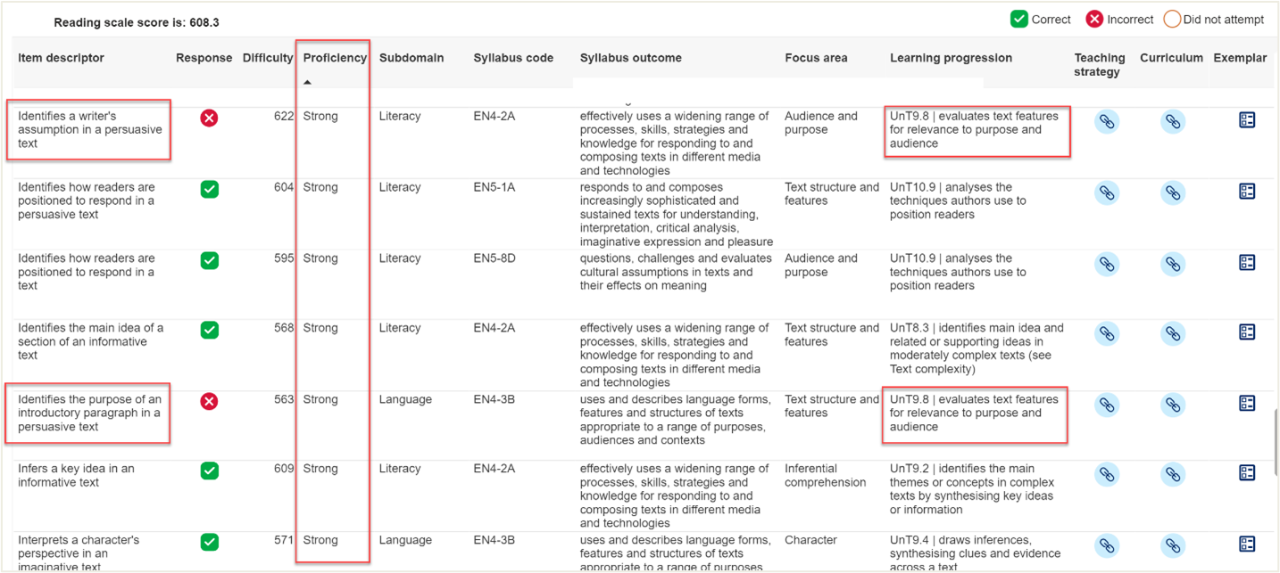

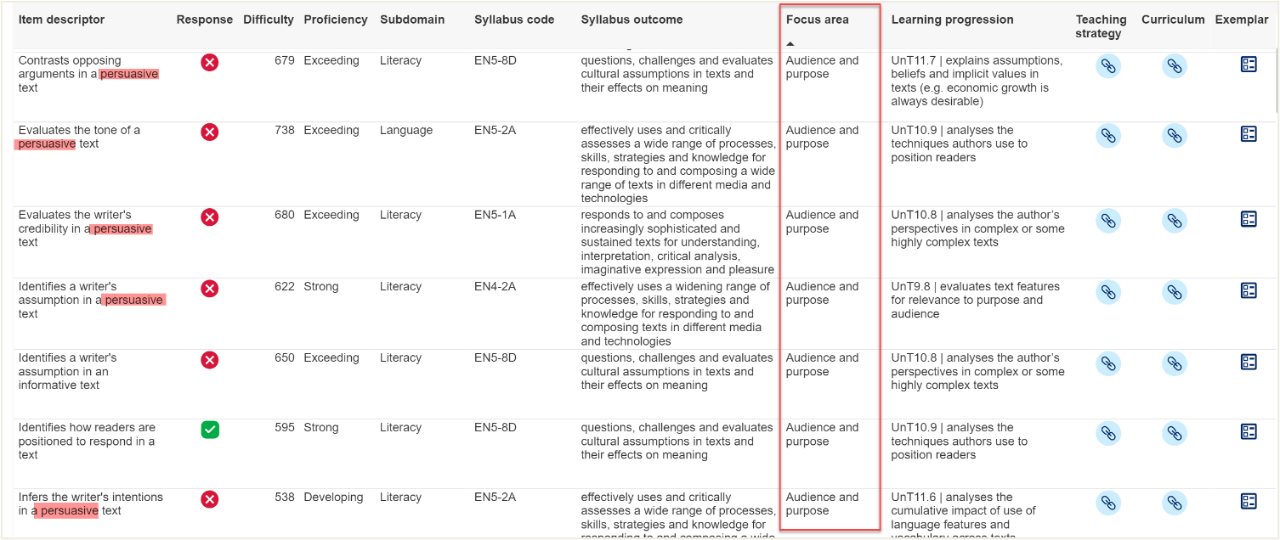

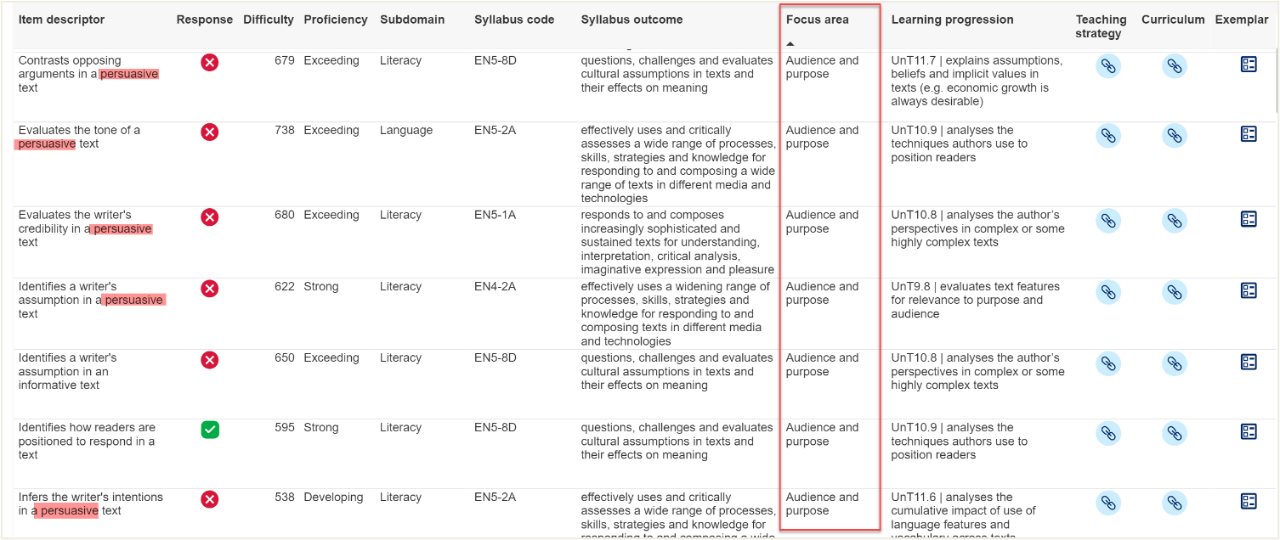

In this example, the user starts by sorting the data by Proficiency. As the student is working at the ‘strong’ proficiency level, the user begins by looking for patterns in the items the student answered incorrectly at this level. The user notes that the two incorrect items both relate to the skill of ‘identifies’ and both relate to persuasive texts. Both items are also linked to the same learning progression.

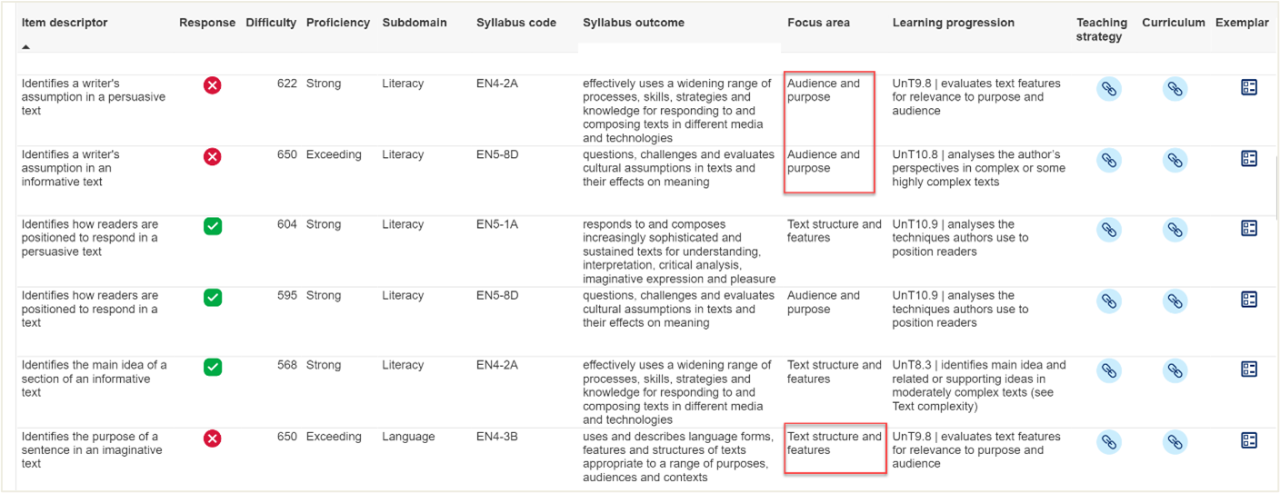

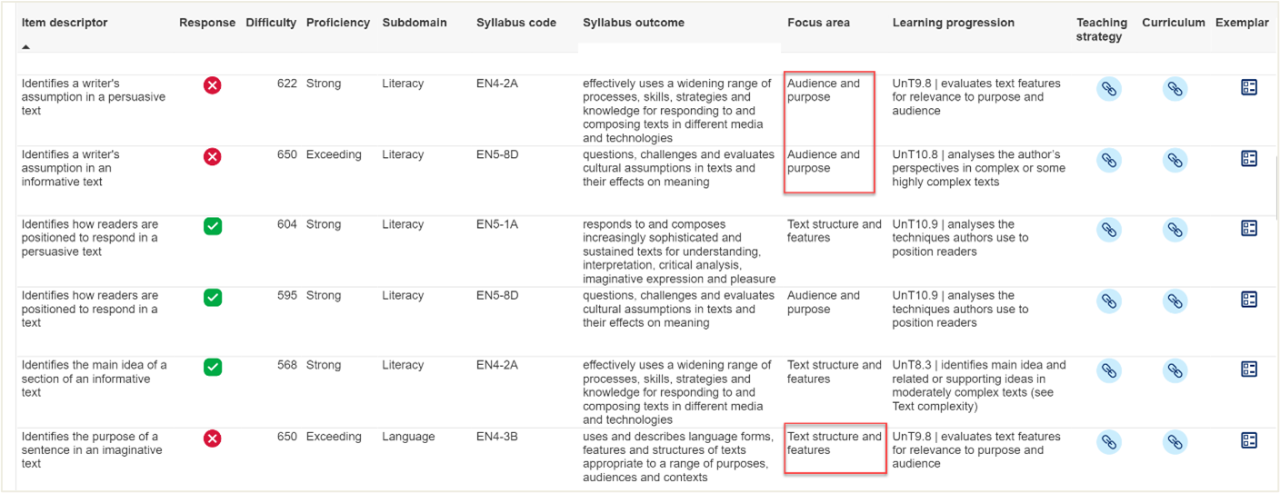

Next, the user sorts by Item descriptor to see which other ‘identifies’ items the student may be finding challenging. Of these 8 items, 5 were answered incorrectly. Looking for patterns across the incorrect items, the user notes ‘Audience and purpose’ and ‘Text structures and features’ as common features. The user also checks the text type and syllabus codes but finds no other similarities.

Next, the user sorts by Focus area to check whether the student also answered other items in the same focus areas incorrectly. The user notes a high number of incorrect responses, and that many of these items share the same text type. After reviewing the syllabus outcomes and learning progression indicators, the user concludes that this student may find it challenging to understand and analyse authors’ perspectives and the techniques they use, particularly in texts of increasing complexity.

The user can then follow the Teaching strategy link to find resources that support teaching and learning activities in the classroom.

Consider:

- Keep your analysis balanced: identify both the areas where the student is working well and the areas where they may need to develop.

- Consider performance in previous NAPLAN assessments. Has the student demonstrated expected progress?

- Use other sources of data to triangulate your findings. Do internal assessment results, classroom observations and work samples confirm what you have found?

- Are any results a surprise? Which results require further investigation?

Where to next?

It is recommended that Scout data be used in conjunction with other data sources. Triangulate findings from these reports with other available internal and external data. This could include student data from internal class and cohort assessments as well as external sources such as the Check-in Assessment. Depending on the school's context, it may also be relevant to look at attendance and engagement data alongside student performance data.

The School item analysis report can help identify whether areas of strength and areas for development are shared by all students across the cohort or are particular to one class.

Qualitative data sources, including document analysis, observations and focus groups, may also provide additional insight into the teaching strategies and programs that are influencing student achievement.