Using item analysis to understand the literacy and numeracy needs of a cohort

For: School leaders

NAPLAN provides opportunities for school leaders to better understand the performance of their cohorts across the domains of Numeracy, Reading, Writing, Spelling, Punctuation and Grammar.

Scout provides several reports to support schools with this, including the School item analysis reports. These reports help leaders identify and monitor the literacy and numeracy performance of cohorts and identified groups, which may assist them to report on the progress of key literacy and numeracy initiatives in their Strategic Improvement Plans.

To support the identification of focus areas across literacy and numeracy, we recommend using a range of Scout data alongside internal school data.

This Scout in Practice focuses on how to use the School item analysis reports to identify areas of strength and areas for development across all NAPLAN domains for whole cohorts.

School item analysis report

The School item analysis report compares a school's performance on each NAPLAN item with that of other NSW government schools (DoE State). This report allows schools to analyse their performance on each item across all NAPLAN domains. There are two reports:

- School item analysis

- School item analysis (writing).

The School item analysis report provides the following information for Reading, Spelling, Grammar & Punctuation and Numeracy:

- School % correct by syllabus area

- Test item details

- Test item syllabus details

- Student item map.

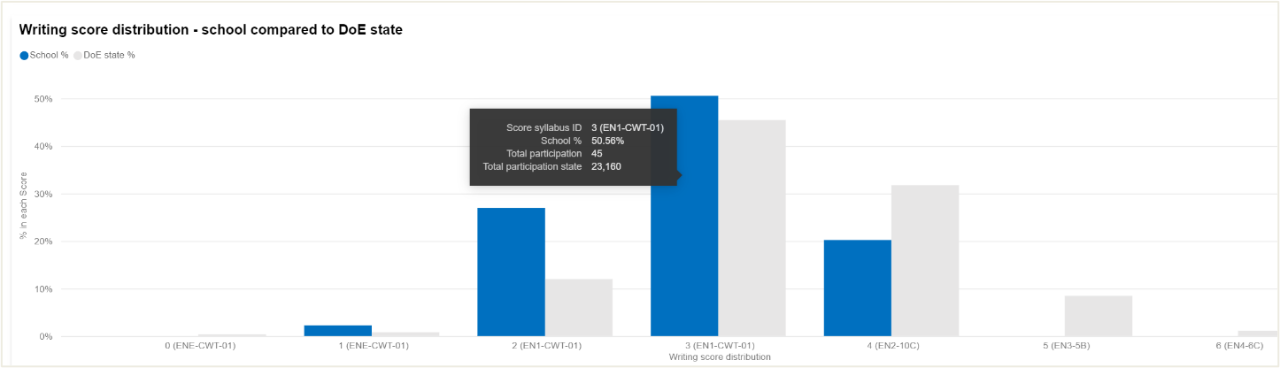

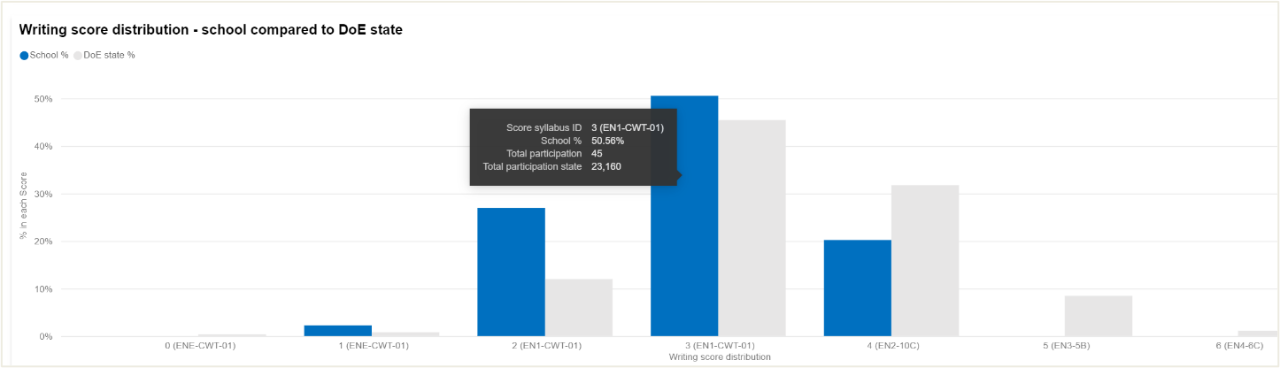

The School item analysis (writing) report provides the following information for writing only:

- Writing score distribution – school compared to DoE State

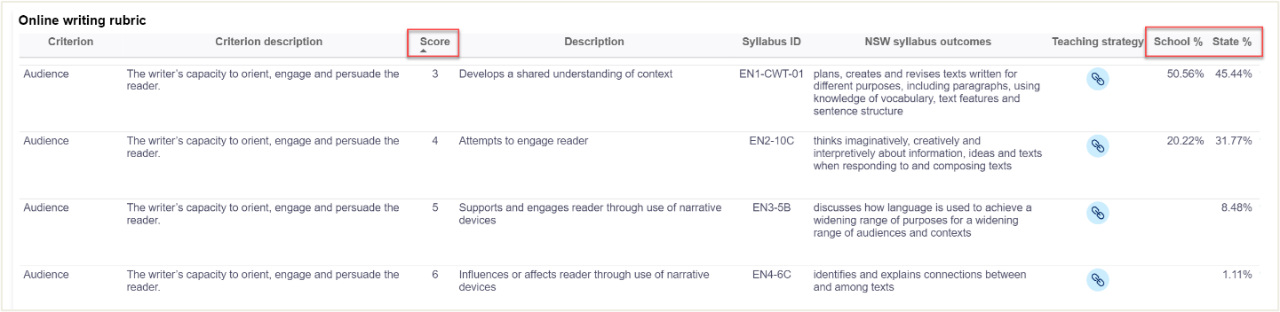

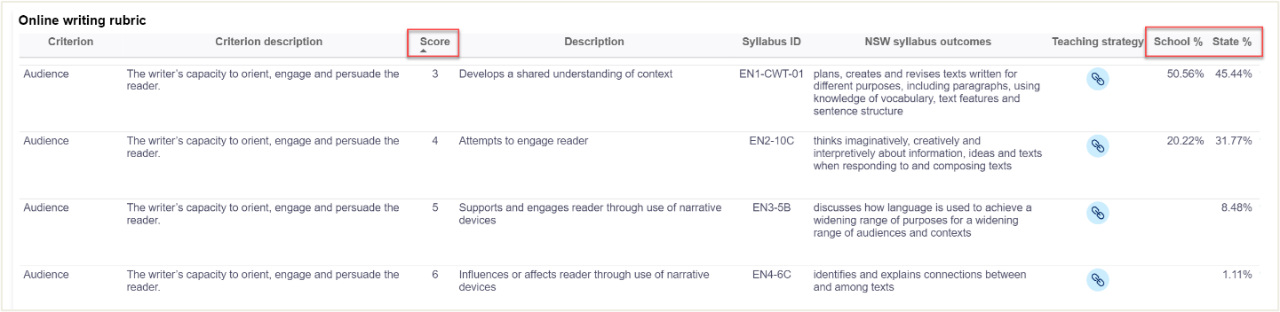

- Online writing rubric

- Student – writing response details.

Users can filter information by Specific group type and Specific group. This means both leaders and teachers can use this data to focus on Custom Scout groups, Roll Class and Sentral Timetabled groups.

Take care when interpreting results for a cohort with a small number of students. With small sample sizes, each student's responses have a large effect on the percentages, so it is difficult to make generalisations about student performance across both syllabus outcomes and test items.
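To see why small cohorts need care: when n students are exposed to an item, each student's response is worth 100 ÷ n percentage points of the school % correct. With 5 students exposed, a single response moves the result by 20 percentage points; with 100 students, by only 1. The Python sketch below illustrates this arithmetic; the function name is hypothetical and is not part of Scout.

```python
def points_per_student(n_exposed: int) -> float:
    """Percentage points that one student's response adds to or removes from
    an item's % correct when n_exposed students were exposed to the item."""
    if n_exposed <= 0:
        raise ValueError("n_exposed must be positive")
    return 100 / n_exposed


# Compare how far one response shifts the result for different cohort sizes
for n in (5, 10, 25, 100):
    print(f"{n:>3} students: one response = {points_per_student(n):.1f} percentage points")
```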

Scenario

As a school leader, you are leading the analysis of NAPLAN data to identify areas of strength and areas for development across all aspects of literacy and numeracy. The analysis will provide the starting point for professional learning for all teachers. This information will also be used alongside internal school data to measure progress toward improvement measures in the Strategic Improvement Plan.

Focus question: What are our areas of strength and areas for development in reading?

Open the School item analysis report.

Select the following slicers:

- Assessment school

- Assessment

- Assessment year

- Domain

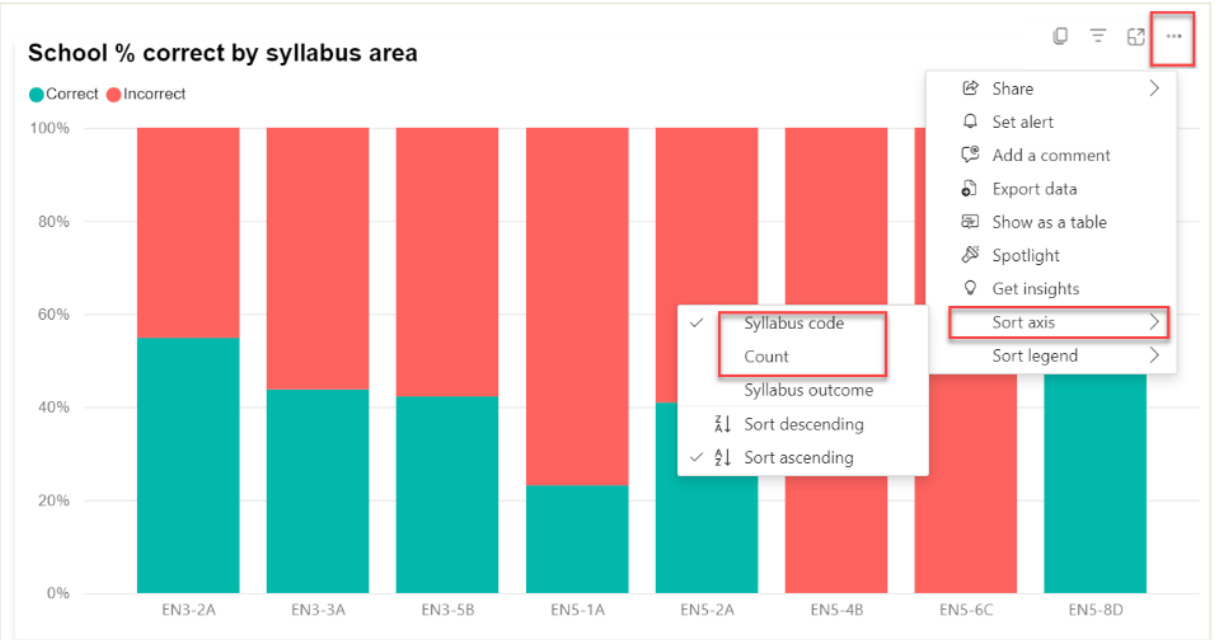

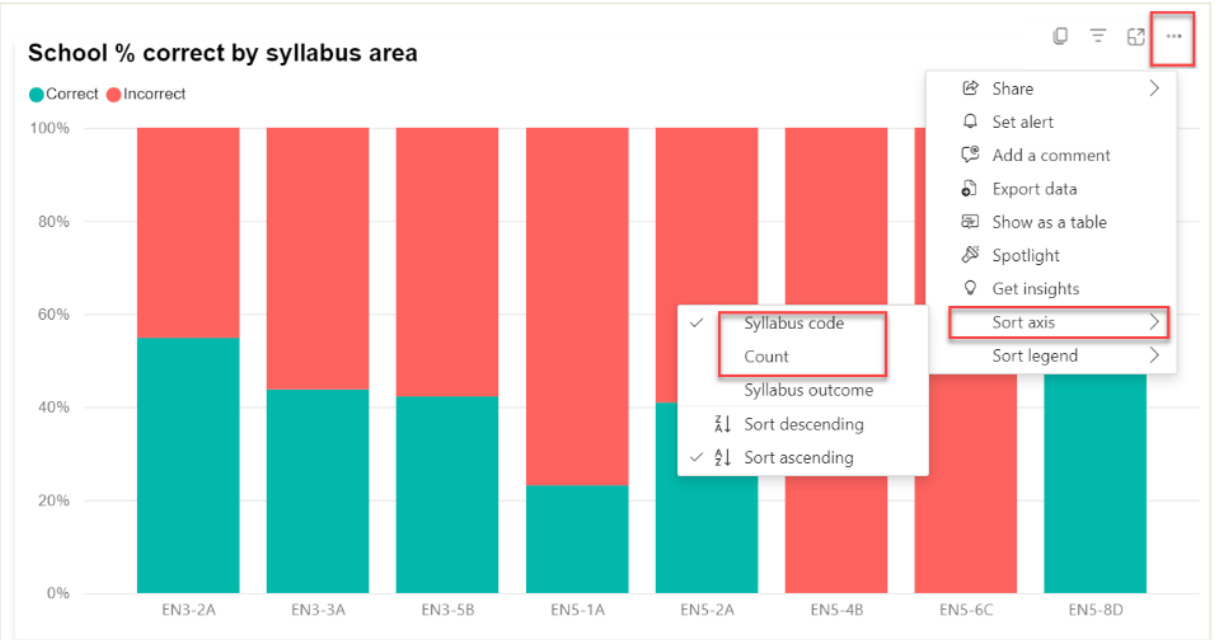

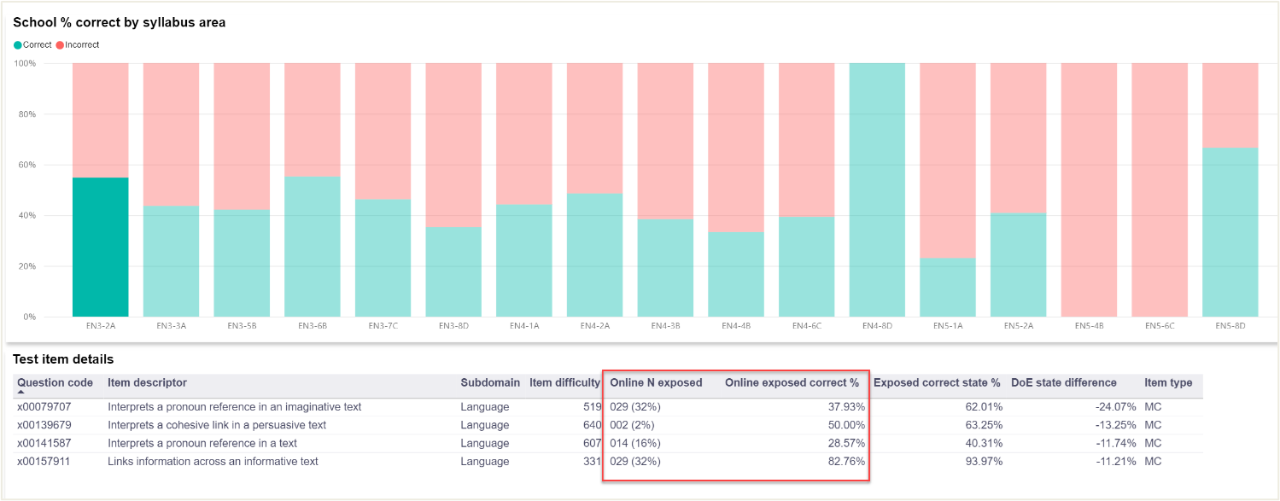

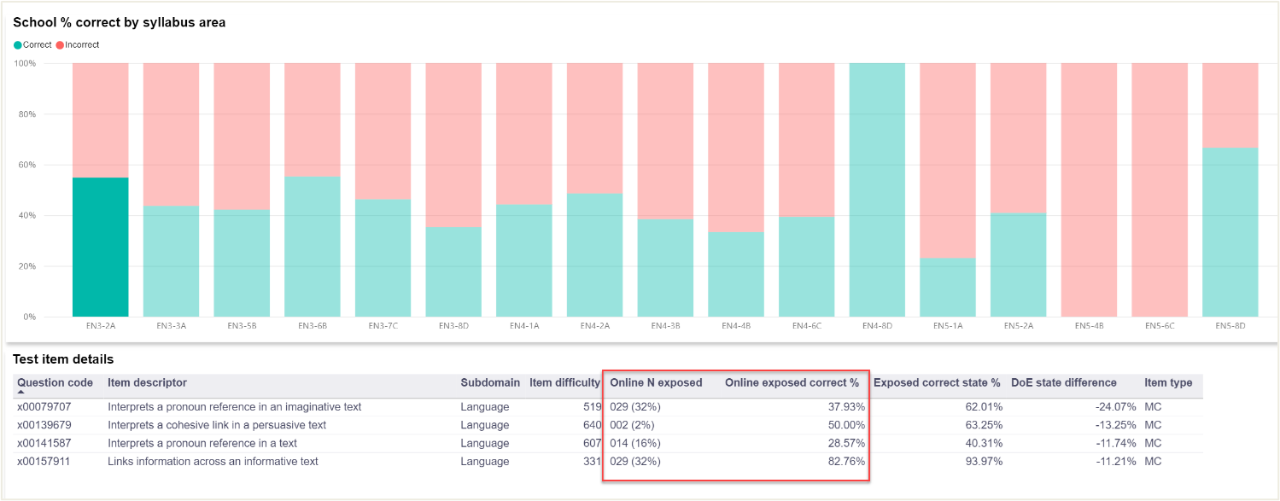

Focus first on School % correct by syllabus area. Select the ellipsis (...) in the top right-hand corner of the chart, choose the sort axis option, then choose either syllabus code or count to reorder the syllabus areas.

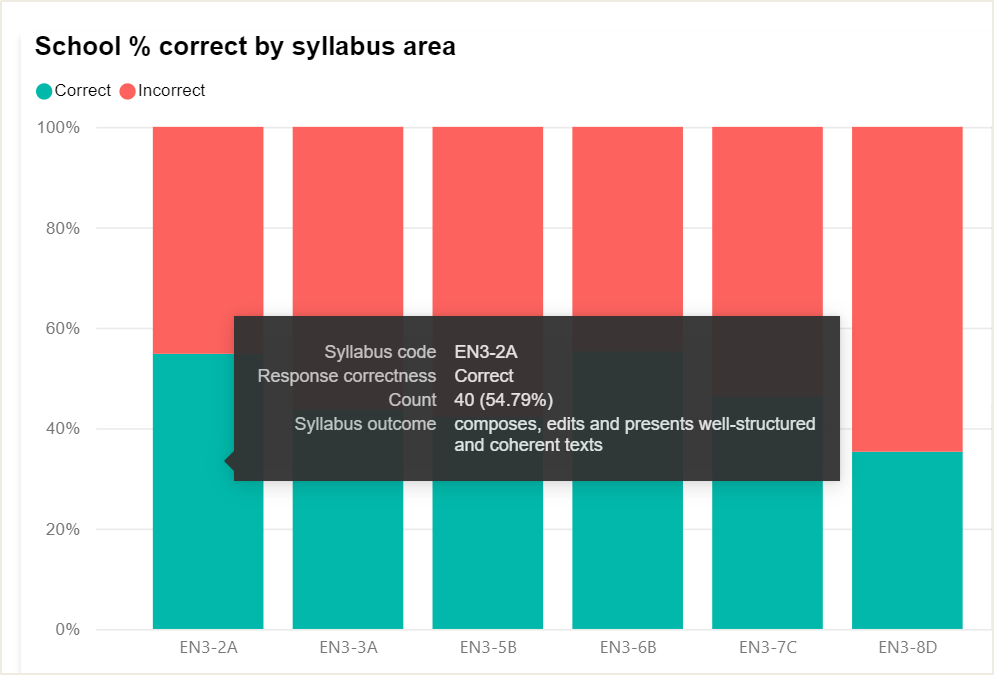

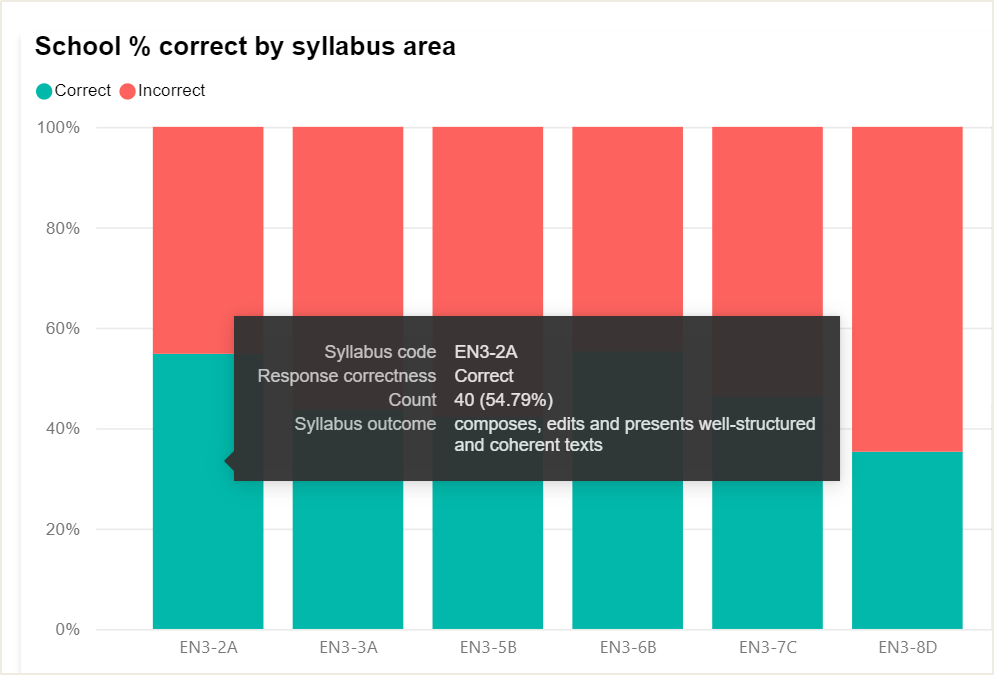

Hover over the green and red bars to see the count (the number and percentage of responses answered correctly or incorrectly) as well as syllabus details. In the example below, the user hovered over the green section of EN3-2A. This showed 40 correct responses for this syllabus outcome, which was 54.79% of all responses to EN3-2A items.
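The tooltip figures are related by simple arithmetic: % correct = correct responses ÷ total responses × 100. In the EN3-2A example, 40 correct responses at 54.79% implies 73 responses in total (40 ÷ 73 ≈ 0.5479), so 33 responses were incorrect (45.21%). The total of 73 is inferred from the two figures shown rather than read from the report. A minimal Python sketch of the calculation, assuming results are rounded to two decimal places as in the tooltip:

```python
def percent_correct(correct: int, total: int) -> float:
    """Share of responses answered correctly, as a percentage rounded to 2 dp."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(correct / total * 100, 2)


# EN3-2A example: 40 correct responses out of an inferred 73 in total
correct, total = 40, 73
print(percent_correct(correct, total))          # 54.79 (green bar)
print(percent_correct(total - correct, total))  # 45.21 (red bar)
```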

Select a syllabus bar to focus on the items associated with that syllabus outcome. The selection is cross-highlighted in the Test item details table below, which then shows only the items associated with the selected outcome. In the example below, the user can see how many items students were exposed to, as well as the percentage correct for each item.

Consider:

- Which syllabus outcome(s) had the most items?

- Which syllabus outcome(s) had the fewest items?

- Where is there a high number or percentage of correct responses?

- Where is there a high number or percentage of incorrect responses?

- Does anything jump out as needing further investigation?

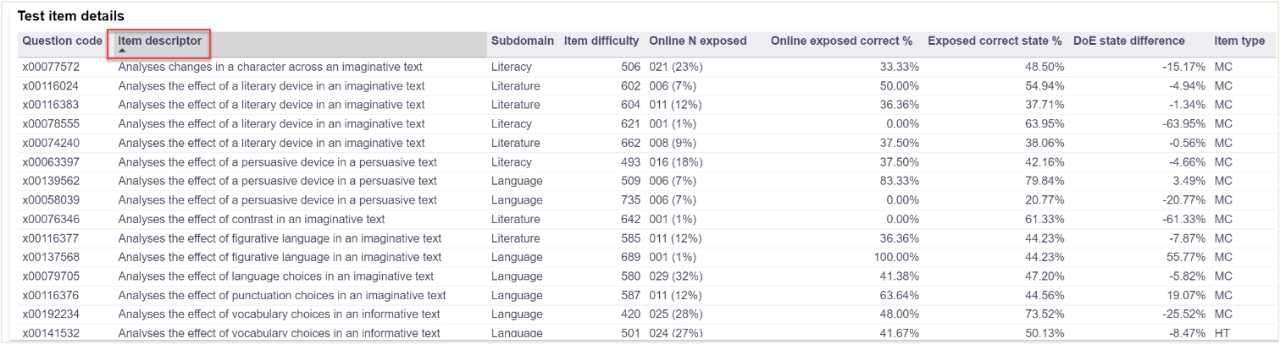

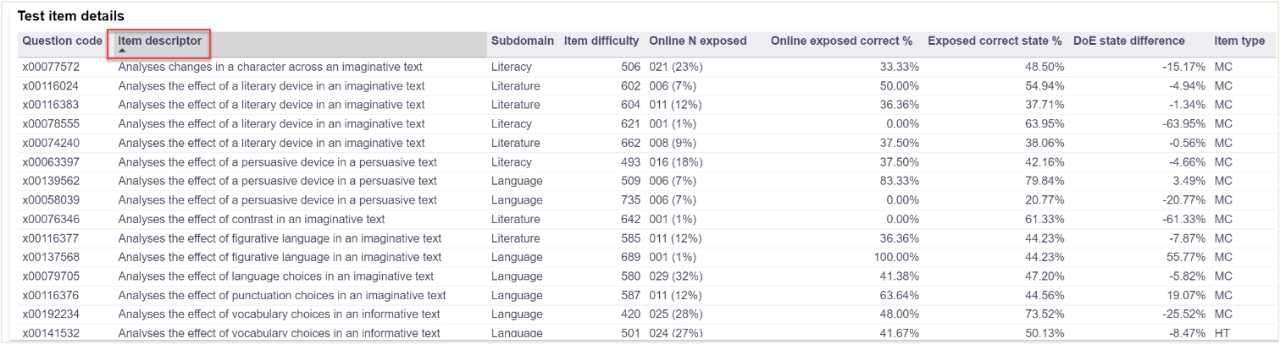

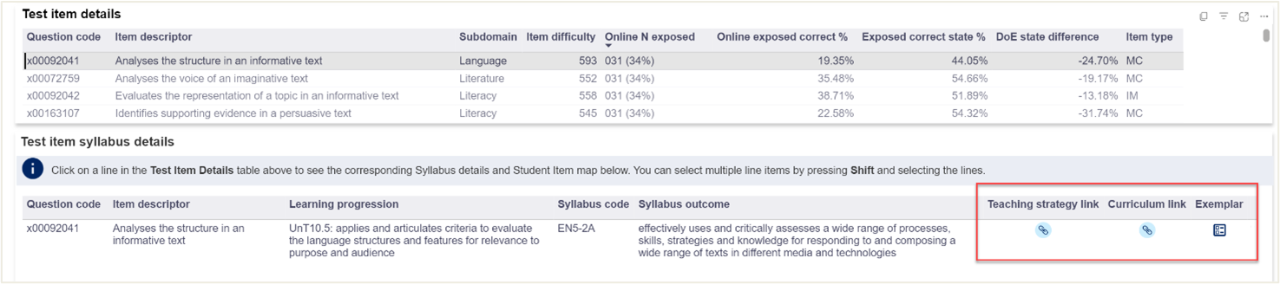

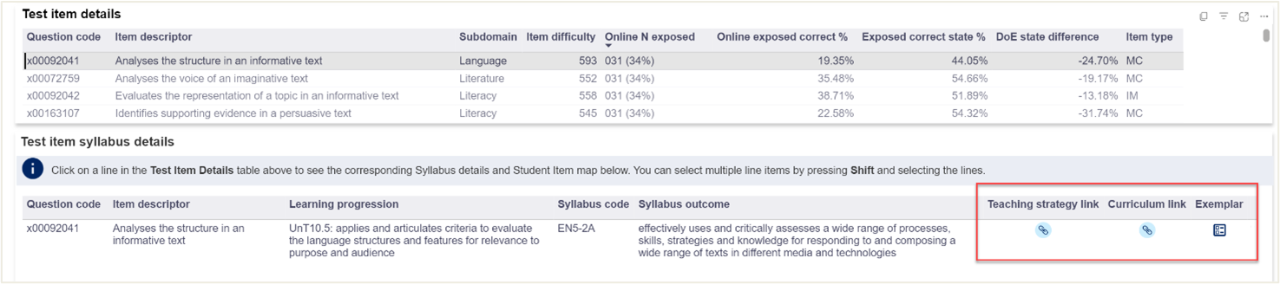

An alternative approach is to focus on the Test item details table. Users can sort the data in this table by clicking any column header. In the example below, item descriptors have been sorted into alphabetical order. Users can also sort items by Subdomain, Item difficulty, Online N exposed, Online number correct %, Exposed state correct %, State difference and Item type.

This data can also be exported into an Excel spreadsheet. Click on the ellipsis, then select 'Export'.

Consider:

- Which test items had the highest and lowest exposure?

- Which test items had the highest percentage of correct responses, and which had the highest percentage of incorrect responses?

- Look for patterns across item descriptors. Are there particular areas where students have performed well? Are there areas where further investigation is needed?

- Look for patterns across text types. Do students find some types of text more challenging than others?

- Sort data by State difference. Are there areas where the difference between the cohort and the State is significant? How many students were exposed to these items? Is this a need for the whole cohort, or potentially only for some students?

- Sort data by item type. Do students find some types of questions easier than others?

- Does anything jump out as needing further investigation?
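Once the Test item details table has been exported, the State difference questions can also be explored outside Scout. The Python sketch below assumes the exported rows have been loaded as dictionaries keyed by the column names shown in the report (Item descriptor, Online N exposed, State difference); the exact export headers and file format are assumptions, so check them against your own export. It lists the items where the cohort is furthest below DoE State and flags items seen by only a few students, which helps separate a cohort-wide need from a need for some students. The function name and the threshold of 10 students are hypothetical illustrations, not a Scout or department rule.

```python
def items_below_state(rows, min_exposed=10, top=5):
    """Return up to `top` items with the most negative State difference.

    Each result is (descriptor, state_difference, n_exposed, low_exposure),
    where low_exposure is True when fewer than `min_exposed` students
    were exposed to the item (hypothetical threshold).
    """
    below = [r for r in rows if r["State difference"] < 0]
    below.sort(key=lambda r: r["State difference"])  # most negative first
    return [
        (
            r["Item descriptor"],
            r["State difference"],
            r["Online N exposed"],
            r["Online N exposed"] < min_exposed,
        )
        for r in below[:top]
    ]


# Illustrative rows only; these are made-up values, not real NAPLAN results
rows = [
    {"Item descriptor": "Infers a character's motive", "Online N exposed": 42, "State difference": -12.5},
    {"Item descriptor": "Identifies the main idea", "Online N exposed": 6, "State difference": -18.0},
    {"Item descriptor": "Locates directly stated information", "Online N exposed": 45, "State difference": 4.2},
]

for descriptor, diff, n, low in items_below_state(rows):
    flag = " (few students exposed)" if low else ""
    print(f"{diff:+.1f}  {descriptor}  n={n}{flag}")
```

Here the largest gap belongs to an item only 6 students saw, so it may reflect a need for some students rather than the whole cohort.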

To drill down further into individual test items, select the item in the Test item details table. This will provide users with additional details, including the Teaching strategy link, Curriculum link and Exemplar.

Repeat for the relevant remaining domains as needed.

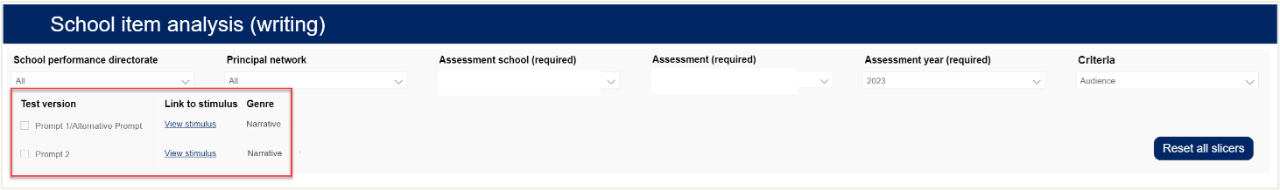

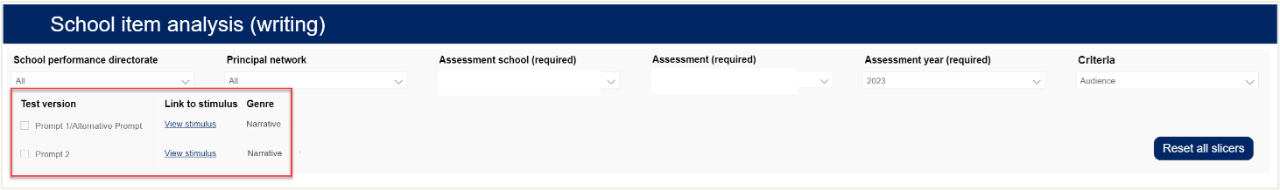

To view writing results, open the School item analysis (writing) report. Select the following slicers:

- Assessment school

- Assessment

- Assessment year

- Criteria.

You can focus on individual prompts, but we recommend looking at whole-cohort data without selecting a specific prompt.

Hover over each bar in the Writing score distribution chart to see where students in the cohort are achieving compared with DoE State.

The Online writing rubric provides additional information. Consider where students have achieved and how this compares to the State. Repeat for each criterion to find areas of strength and areas for development.

Where to next?

It is recommended that Scout data be used in conjunction with other data sources. Triangulate findings from these reports with other available internal and external data. This could include student data from internal class and cohort assessments as well as external sources such as the Check-in Assessment. Depending on the school's context, it may also be relevant to look at attendance and engagement data alongside student performance data.

It may also be useful to use the Cohort proficiency report to identify the proficiency levels at which students are working. This may help school leaders drill into the data and focus on the achievement of students working at different levels.

Qualitative data sources, including document analysis, observations, and focus groups, may also provide additional insight into teaching strategies and programs that influence student achievement.