Analysing HSC results

This resource supports schools to improve their analysis and evaluation of HSC results.

Many schools analyse their HSC results annually to identify their successes and areas for improvement. This analysis helps schools communicate HSC successes to the community and informs future planning, including curriculum offerings, staff professional learning and student learning support.

Suggested reports for this practice are:

- Average HSC Score vs SSSG/State report

- HSC Results report

- School vs State (within School) report.

All reports can be found in the Scout HSC app.

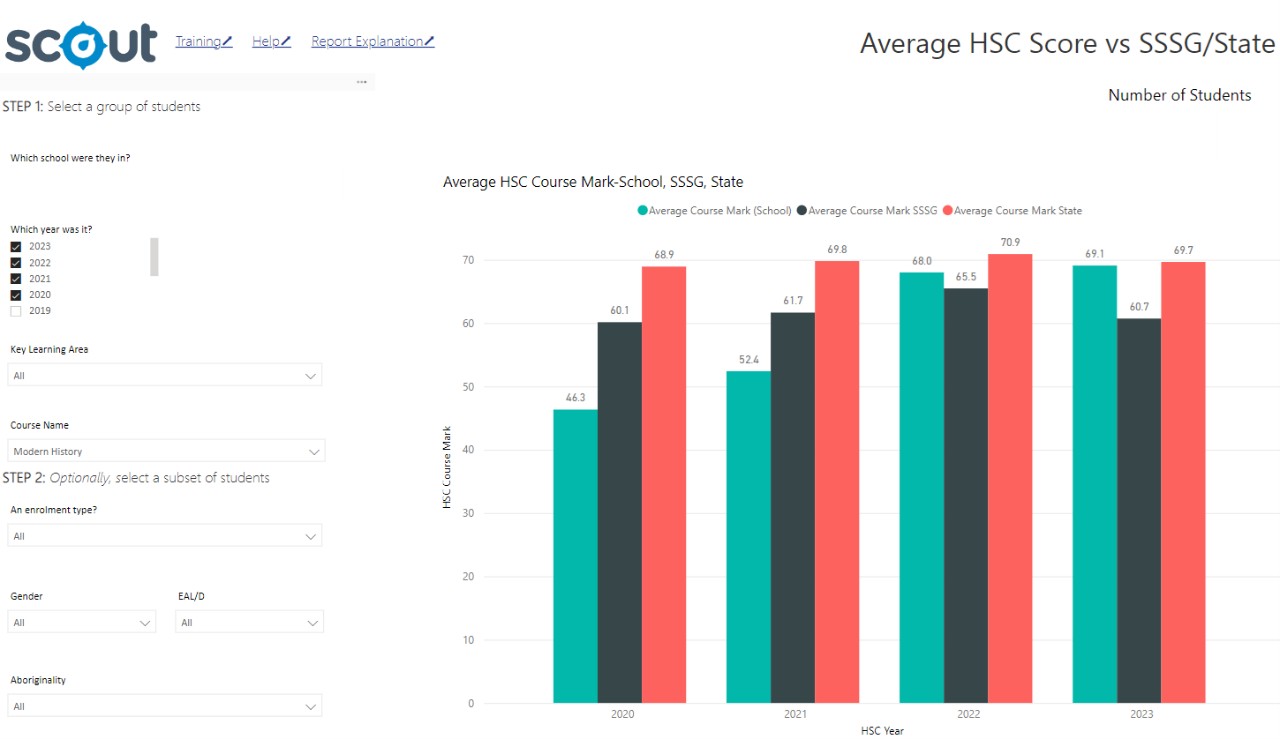

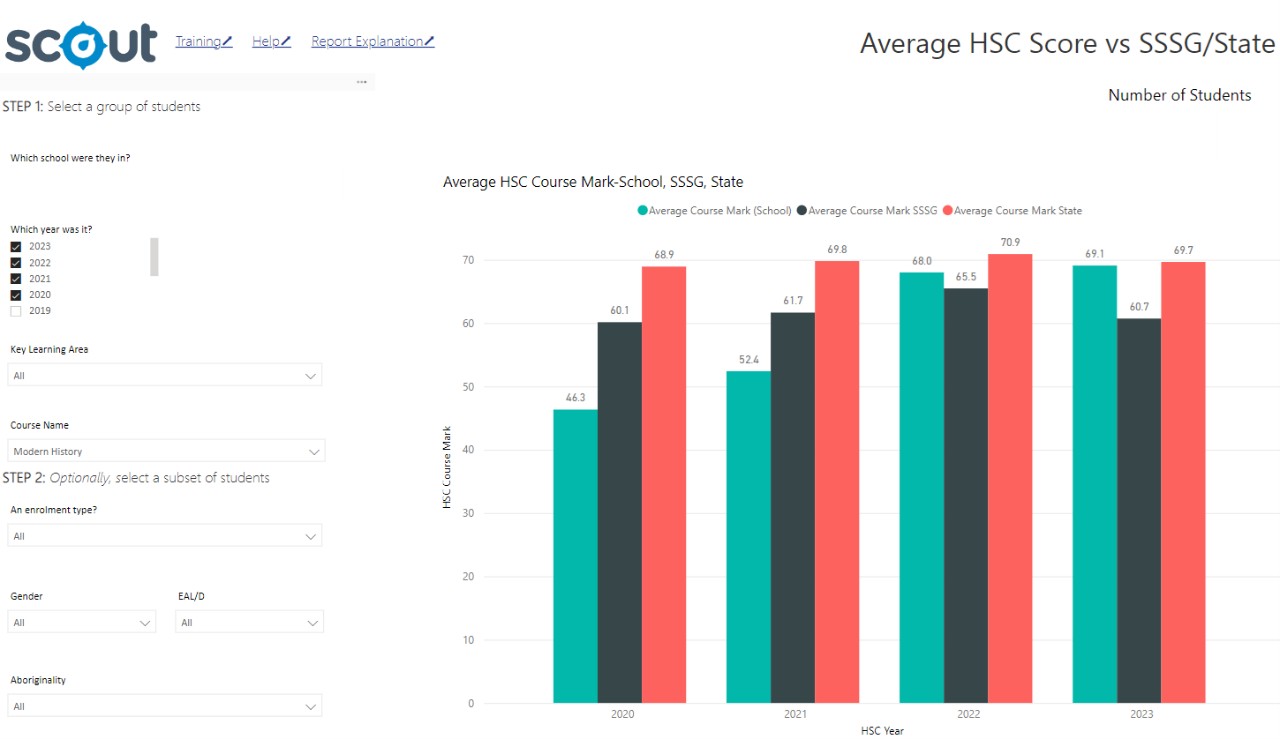

Using the Average HSC Score vs SSSG/State report

The Average HSC Score vs SSSG/State report provides a high-level view of the average HSC score for a school over time (by course) and compares the results to the statistically similar school group (SSSG) and the state.

Schools can use this report to assess their students' performance compared to their SSSG and identify areas of strength and areas to target for improvement. Trends in performance over time can also be observed.
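The comparison described above can also be reproduced outside Scout, for example when preparing notes for a staff meeting. The following is a minimal sketch only: the scores, the `averages_by_year` structure and the `gaps_by_year` helper are hypothetical and are not part of Scout. It shows how the gap between a school's average and the SSSG and state averages could be tracked year by year for one course.

```python
# Hypothetical average scores for a single course, for illustration only.
# Real values would be read from the report itself.
averages_by_year = {
    2021: {"school": 72.4, "sssg": 70.1, "state": 73.0},
    2022: {"school": 73.8, "sssg": 70.6, "state": 73.2},
    2023: {"school": 75.1, "sssg": 71.0, "state": 73.5},
}


def gaps_by_year(averages):
    """Return the school's gap to the SSSG and state averages for each year.

    A positive gap means the school average is above the comparison group.
    """
    return {
        year: {
            "vs_sssg": round(values["school"] - values["sssg"], 1),
            "vs_state": round(values["school"] - values["state"], 1),
        }
        for year, values in sorted(averages.items())
    }


gaps = gaps_by_year(averages_by_year)
for year, gap in gaps.items():
    print(year, gap)
```

A gap that widens or narrows over several years is usually more informative than a single year's figure, particularly for courses with small cohorts.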

Curriculum Head Teachers could consider:

- What programming, assessment or teaching practices took place that may have influenced the results or trends?

- How does each gender compare against the cohort?

- How does each equity group (e.g. Aboriginal and Torres Strait Islander students, English as an Additional Language/Dialect students) compare against the cohort?

Leadership teams could consider:

- What are the overall strengths?

- What are the overall areas for improvement?

- Where is curriculum differentiation needed?

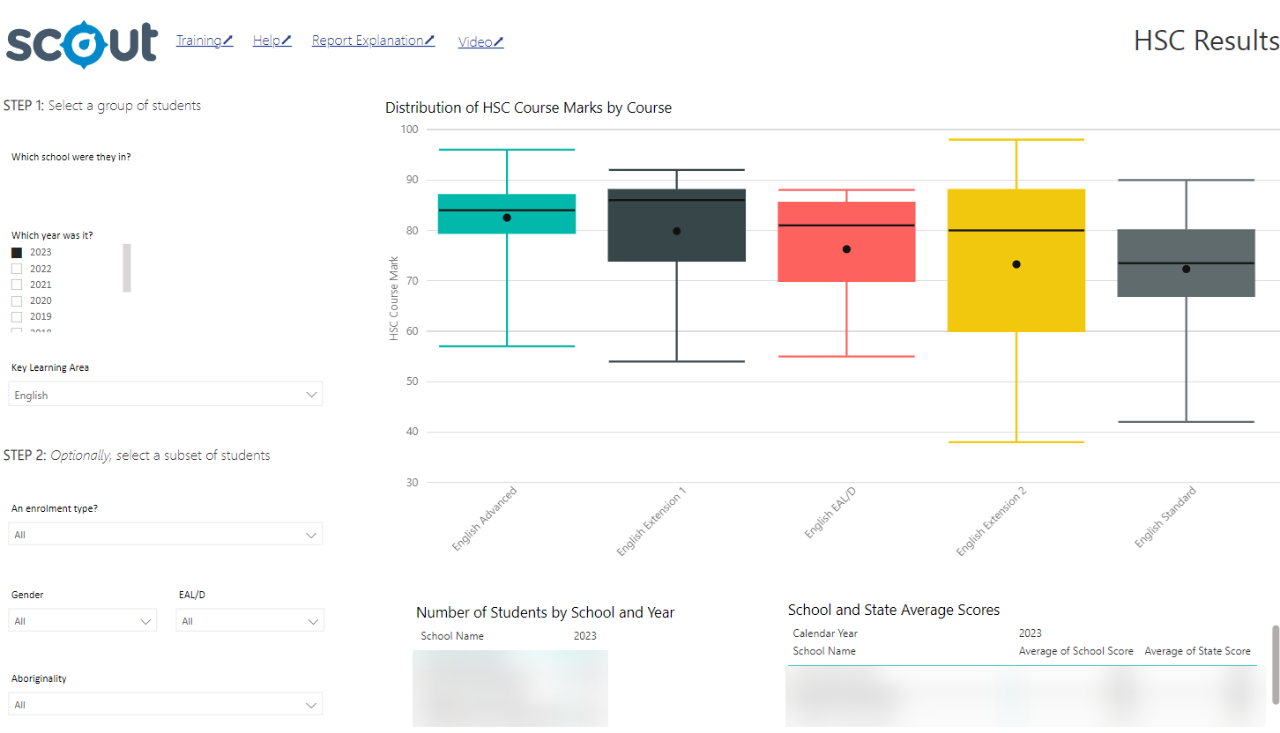

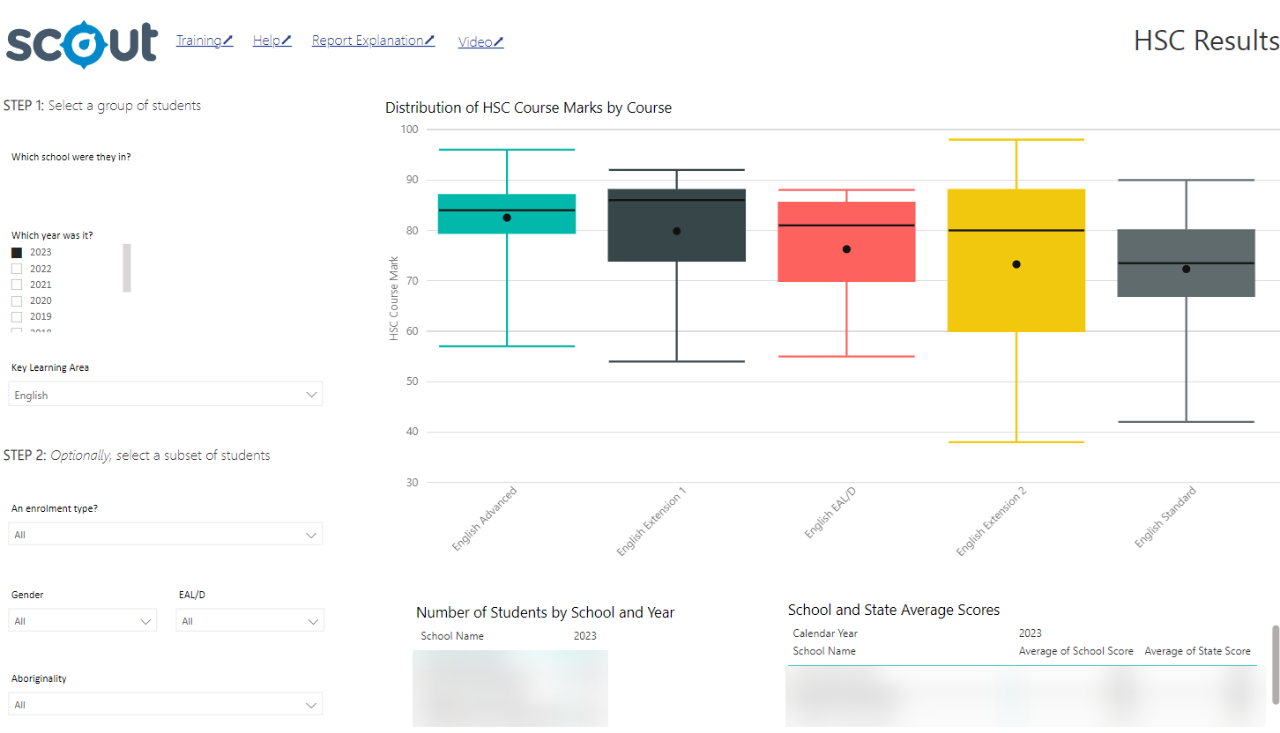

Using the HSC Scaled Results report

The first page of this report shows HSC performance across Key Learning Areas (KLAs) in the form of a box-and-whisker plot (or boxplot).

The second page of this report shows HSC results by socio-economic status (SES).

The third page of this report shows HSC results in Bands.

Page 1 – HSC Results (Box Plots)

Distribution of Scaled HSC Score by Course

This box-and-whisker chart shows the distribution of results for students in the selected calendar year and key learning area.

Hover over the chart to view the following metrics:

- # Samples - Number of students who sat the assessment in that course.

- Maximum - The highest result achieved in the course by a member of the cohort.

- Quartile 3 - The score that is greater than 75% of the scores achieved by the cohort.

- Median - The score in the middle of the school's distribution where 50% of the cohort scored above the score and 50% below.

- Average - The score that represents the central or typical value achieved by students in the course (sum of scores achieved divided by number of scores).

- Quartile 1 - The score that is greater than 25% of the scores achieved by the cohort.

- Minimum - The lowest score achieved in the course by a member of the cohort.
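To make the metrics above concrete, the sketch below calculates them for a small set of scores. It is illustrative only: the scores are hypothetical, the `box_plot_summary` helper is not part of Scout, and the quartile method used here (Python's "inclusive" method) is an assumption, so quartile values may differ slightly from those Scout displays.

```python
import statistics


def box_plot_summary(scores):
    """Return the box-and-whisker metrics for one course's scores."""
    ordered = sorted(scores)
    # Assumption: quartiles use the "inclusive" method; Scout may use another.
    quartile_1, median, quartile_3 = statistics.quantiles(
        ordered, n=4, method="inclusive"
    )
    return {
        "samples": len(ordered),
        "maximum": ordered[-1],
        "quartile_3": quartile_3,
        "median": median,
        "average": statistics.mean(ordered),
        "quartile_1": quartile_1,
        "minimum": ordered[0],
    }


# Hypothetical scores for a cohort of ten students, for illustration only.
scores = [52, 61, 64, 68, 70, 73, 75, 79, 84, 91]
summary = box_plot_summary(scores)

# The interquartile range covers the middle 50% of the cohort.
interquartile_range = summary["quartile_3"] - summary["quartile_1"]

# An average well above or below the median suggests that a few very high
# or very low scores are pulling the average away from the typical result.
average_minus_median = summary["average"] - summary["median"]

print(summary)
print(interquartile_range, average_minus_median)
```

In this example the average and median are close, which suggests the scores are spread fairly evenly around the middle of the distribution.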

Curriculum Head Teachers could consider:

- What programming, assessment or teaching practices took place that may have influenced the trends?

- What do the course median and mean (and the difference between them) tell us?

- What does the attainment of the middle 50% of the cohort tell us? What about the top and bottom 25%?

- What could the score distributions in the interquartile range tell us about internal assessment practices?

- Has there been a change in HSC band achievement?

- How does each gender compare against the cohort?

- How does each equity group (e.g. Aboriginal and Torres Strait Islander students, English as an Additional Language/Dialect students) compare against the cohort?

- How have results changed over time?

Leadership teams could consider:

- What are the overall strengths?

- What are the overall areas for improvement?

- Where is curriculum differentiation needed?

- What could the score distributions in the interquartile range tell us about internal assessment practices?

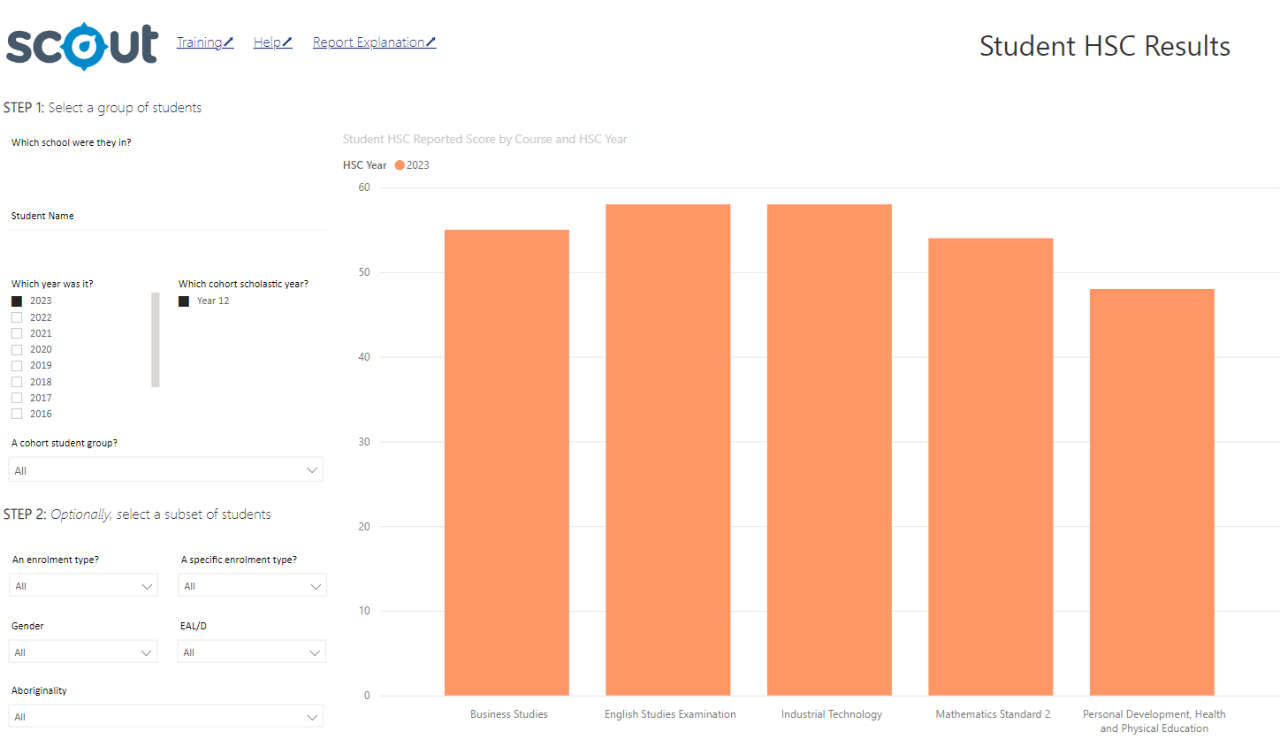

Using the Student HSC Results report

This report enables analysis for individual students. It can demonstrate attainment and value-add, both collectively and individually.

Relevant staff members (for example, wellbeing support staff) could consider:

- patterns, strengths and areas for improvement for students on an Individual Education Plan/Special Provisions

- patterns, strengths and areas for improvement for all EAL/D students

- patterns, strengths and areas for improvement for all Aboriginal and Torres Strait Islander students.

Tips and tools to help analyse HSC reports

Tip 1: Use data from multiple sources

Scout reports, combined with internal and external data sources, can help inform your analysis.

Sources of data include:

- School-based data

- NSW Education Standards Authority (NESA) student performance packages

- Post-school destination data through Universities Admissions Centre (UAC) reports

- Average HSC Score vs SSSG/State report in Scout

- HSC Results report in Scout

- Student HSC Results report in Scout.

Tip 2: Break analysis areas up into specialised teams

At many schools, different teams focus on areas most pertinent to their work.

For example, leadership teams might focus on data at an aggregate level, identifying whole-school areas for improvement. Curriculum Head Teachers, alongside their staff, might focus on their subject areas. Careers and Transition teams could focus on post-school data, while multiple teams could investigate data broken down by equity groups and groups with special learning needs.

Tip 3: Provide screenshots of datasets and narrative to explain the findings

Many schools find it useful to provide screenshots of the datasets within Scout to staff, with an accompanying narrative to explain and discuss each dataset. Suggestions for next steps and discussions about the implications for future practice can be provided with each piece of narrative to support staff understanding.

Tip 4: Your analysis should include suggested next steps

Some areas may require additional conversations and further analysis to identify the root causes of trends. Examples of next steps might include:

- professional learning for staff in the delivery of HSC courses

- program modification

- curriculum differentiation

- milestones for future practice

- curriculum offerings to meet the needs of changing cohorts

- alignment of student attendance and performance outcomes

- more course information provided to students

- post-school transition planning

- establishing consistent school-based review procedures to evaluate current assessment tools

- whole-school approaches to assessment design that can meet the needs of all students.